Data lake

A data lake is a large, unstructured pool of data where there is no need to structure the data upfront. Data lakes are effective for storing massive amounts of data, particularly when the intended use of the data is uncertain.Why are organisations interested in using data lakes?Data lakes encourage organizations to store and process data in its raw format, which can be beneficial for the analytics process. This approach is well-suited for big data analytics, machine learning, and real-time data processing.

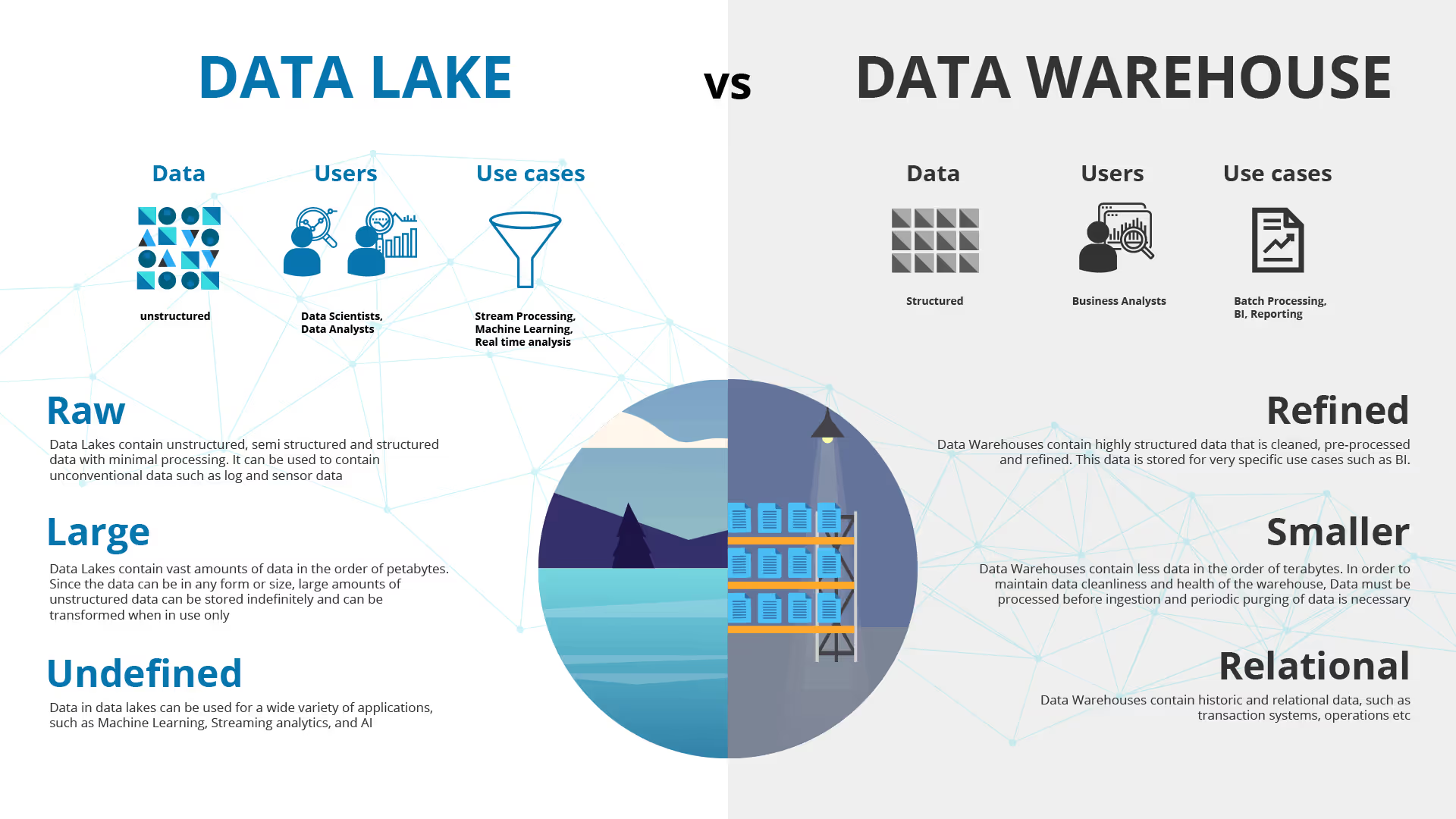

What’s the difference between data lake vs. data warehouseA data lake stores data in its raw form and is flexible. A data warehouse is structured and optimised for queries and reporting.

What is a Data Lake?

A data lake is a large, unstructured pool of data where there is no need to structure the data upfront. Data lakes are effective for storing massive amounts of data, particularly when the intended use of the data is uncertain.

Why are organisations interested in using data lakes?

Data lakes encourage organizations to store and process data in its raw format, which can be beneficial for the analytics process. This approach is well-suited for big data analytics, machine learning, and real-time data processing.

What's the difference between data lake vs. data warehouse?

A data lake stores data in its raw form and is flexible. A data warehouse is structured and optimised for queries and reporting.

How to build a data lake?

Data Lake creation has broadly 5 stages:

- Set up storage for final data to go like Redshift, Snowflake, Databricks

- Move data through pipelines such as Airflow, Prefect

- Cleanse, prep, and catalog data through tools like dbt

- Configure and enforce security and compliance policies for the infrastructure and data lake

- Make data available for analytics through visualisation/alerting tools like Looker, Superset, Matabase