Kafka

Apache Kafka is an open-source distributed event streaming platform, originally developed at LinkedIn and open-sourced in early 2011. It's designed for high throughput, scalability, and fault tolerance, making it a go-to solution for engineers working with real-time data pipelines and streaming applications.Key Features:- Distributed System: Kafka runs as a cluster on one or more servers, supporting partitioning and replication of data streams among the cluster nodes. This design ensures high availability and durability.- Publish-Subscribe Model: At its core, Kafka implements a publish-subscribe messaging system. Producers publish data records to topics, and consumers subscribe to these topics to process the streamed records. This model allows Kafka to decouple data pipelines, enabling complex processing architectures.-Fault-Tolerance and Durability: Kafka maintains data redundancy and resilience through replication and maintains records even after they have been processed, ensuring no data loss in case of system failures.-High Throughput: Kafka's performance is optimized to handle high volumes of data, supporting thousands of messages per second, making it ideal for big data applications.

What is Kafka?

Apache Kafka is an open-source distributed event streaming platform, originally developed at LinkedIn and open-sourced in early 2011. It's designed for high throughput, scalability, and fault tolerance, making it a go-to solution for engineers working with real-time data pipelines and streaming applications.Key Features:- Distributed System: Kafka runs as a cluster on one or more servers, supporting partitioning and replication of data streams among the cluster nodes. This design ensures high availability and durability.- Publish-Subscribe Model: At its core, Kafka implements a publish-subscribe messaging system. Producers publish data records to topics, and consumers subscribe to these topics to process the streamed records. This model allows Kafka to decouple data pipelines, enabling complex processing architectures.-Fault-Tolerance and Durability: Kafka maintains data redundancy and resilience through replication and maintains records even after they have been processed, ensuring no data loss in case of system failures.-High Throughput: Kafka's performance is optimized to handle high volumes of data, supporting thousands of messages per second, making it ideal for big data applications.

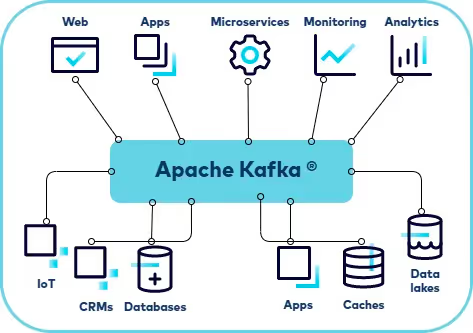

Common Use Cases:- Real-Time Analytics: Kafka is extensively used to build real-time analytical systems that can process and analyze data as it arrives.- Log Aggregation: It provides a centralized system for collecting and aggregating logs from various services, simplifying log analysis and monitoring.- Stream Processing: Kafka can be used in tandem with stream processing frameworks (like Apache Flink or Kafka Streams) for complex processing and transformations of streaming data.- Event Sourcing: Kafka can serve as the backbone for event sourcing architectures, where changes to application state are logged as a sequence of events.Scalability and Integration:- Kafka's distributed nature makes it highly scalable – you can add more nodes to the Kafka cluster to increase capacity.- It integrates well with a variety of data sources, processing frameworks, and data storage systems, making it a versatile tool in a modern data ecosystem.

In summary, Apache Kafka's robust, scalable, and flexible architecture makes it an essential component in handling high-throughput, real-time data scenarios in various domains, from e-commerce and finance to IoT and logistics.

Image source: https://medium.com/@surajit.das0320/kafka-architecture-a5a014197df7