Introduction to Top 13 LLM Gateways

Deploying Large Language Models (LLMs) in production is increasingly complex, particularly with the rising demand for AI and LLM-driven APIs. Gartner predicts that by 2026, over 30% of the growth in API demand will be fueled by AI and LLM tools, underscoring the critical need for efficient model management.

One of the key challenges in this landscape is the difficulty of switching between models from different providers, such as OpenAI's GPT-4 and Anthropic's Claude, each of which exposes its own API. Moving from one to another often requires significant changes to application code, which is time-consuming and inefficient.

LLM Gateways offer a solution by unifying API access, allowing seamless transitions between various models without modifying the underlying code. These gateways enable businesses to integrate and switch between LLMs effortlessly, maximizing flexibility and efficiency in AI deployments.

In this blog, we’ll explore the top 13 LLM Gateways that are changing how businesses use LLMs in their operations.

Key Features to Consider When Choosing an LLM Gateway

When evaluating tools in the LLM Gateway category, consider the following features to ensure you select a platform that meets your needs:

Single Unified API for LLM Providers

Look for a tool that offers a unified API to interact seamlessly with multiple LLM providers, simplifying integration and reducing the need for custom code.
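To make the idea concrete, here is a minimal sketch of a unified API built on an adapter pattern. Everything in it is an assumption for illustration: `UnifiedGateway`, `ChatResponse`, and the two stub adapters are hypothetical names, and a real adapter would call the vendor's SDK or HTTP API instead of echoing the prompt.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ChatResponse:
    provider: str
    text: str


# Hypothetical provider adapters. These stubs only echo the prompt so the
# sketch runs offline; real adapters would translate to each vendor's API.
def call_provider_a(prompt: str) -> ChatResponse:
    return ChatResponse(provider="provider-a", text=f"[a] {prompt}")


def call_provider_b(prompt: str) -> ChatResponse:
    return ChatResponse(provider="provider-b", text=f"[b] {prompt}")


class UnifiedGateway:
    """Routes one request shape to whichever provider adapter is registered."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Callable[[str], ChatResponse]] = {}

    def register(self, name: str, adapter: Callable[[str], ChatResponse]) -> None:
        self._adapters[name] = adapter

    def chat(self, provider: str, prompt: str) -> ChatResponse:
        if provider not in self._adapters:
            raise KeyError(f"unknown provider: {provider}")
        return self._adapters[provider](prompt)


gateway = UnifiedGateway()
gateway.register("provider-a", call_provider_a)
gateway.register("provider-b", call_provider_b)
```

With this shape, switching providers means changing the `provider` argument passed to `gateway.chat`, while the calling code and the response type stay the same.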

Effective Monitoring

The tool should provide robust monitoring capabilities, including logging and tracking interactions with the models. This allows you to analyze performance, detect issues, and maintain control over your LLM usage.
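As a rough illustration of what such logging might capture, the sketch below wraps a model call and records latency and success for each request. `MonitoredClient` and `CallRecord` are hypothetical names, and the wrapped `call` is any function you supply; a production gateway would ship these records to a logging or tracing backend rather than keep them in memory.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CallRecord:
    model: str
    prompt_chars: int
    latency_ms: float
    ok: bool


class MonitoredClient:
    """Wraps a model call and keeps one record per request."""

    def __init__(self, model: str, call: Callable[[str], str]) -> None:
        self.model = model
        self._call = call
        self.records: List[CallRecord] = []

    def complete(self, prompt: str) -> str:
        start = time.perf_counter()
        ok = False
        try:
            result = self._call(prompt)
            ok = True
            return result
        finally:
            # Runs on success and on failure, so errors are logged too.
            latency_ms = (time.perf_counter() - start) * 1000
            self.records.append(
                CallRecord(self.model, len(prompt), latency_ms, ok)
            )
```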

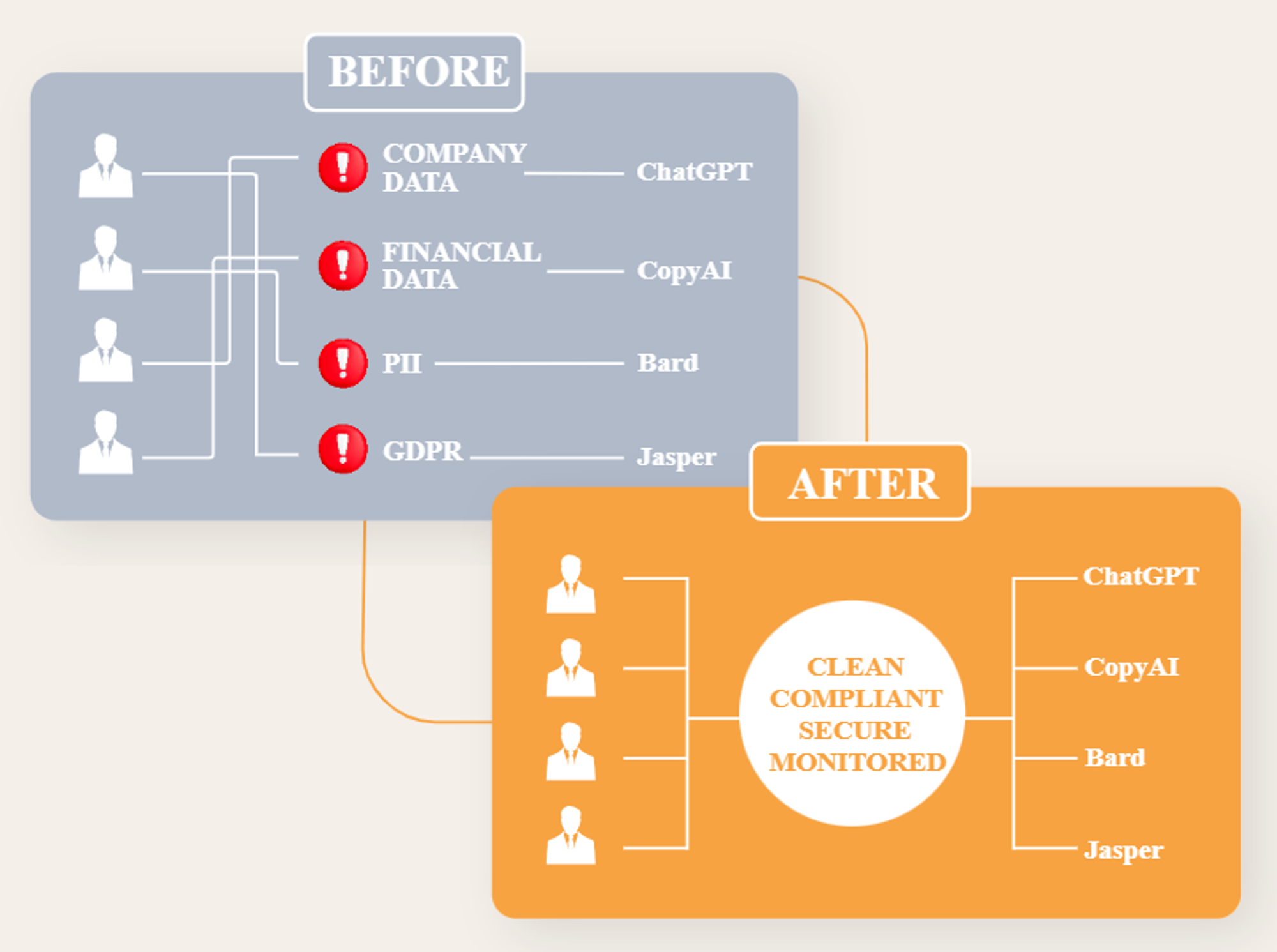

Enhanced Security

Security is paramount. Choose a gateway that centralizes access control, manages secrets, and masks sensitive information before sending requests to LLMs. Additionally, it should enforce Role-Based Access Control (RBAC) to limit access to authorized users.
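Masking can be as simple as a pass over the prompt before it leaves your network. The sketch below is a deliberately naive example that assumes regular expressions are good enough for emails and card-like digit runs; `mask_sensitive` and both patterns are illustrative, and real gateways typically rely on more thorough detection.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Deliberately loose: 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def mask_sensitive(prompt: str) -> str:
    """Replace emails and card-like numbers with placeholders."""
    masked = EMAIL_RE.sub("[EMAIL]", prompt)
    masked = CARD_RE.sub("[CARD]", masked)
    return masked
```

A gateway would apply a function like this to every outgoing request, so individual applications do not each have to remember to do it.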

High Reliability and Availability

Ensure the gateway offers high reliability, with features like automatic retries and rerouting of requests to alternative models in case of failures. This guarantees consistent performance and uptime.
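The retry-then-reroute behavior can be sketched in a few lines. This example assumes each provider is a plain function that raises a hypothetical `ProviderError` on failure; `call_with_fallback` and the two stub providers are illustrative names, not part of any gateway's API.

```python
import time
from typing import Callable, Optional, Sequence


class ProviderError(Exception):
    """Raised by a provider call that failed (hypothetical error type)."""


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    max_retries: int = 2,
    base_delay: float = 0.5,
) -> str:
    """Try each provider in order, retrying with exponential backoff."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(max_retries + 1):
            try:
                return provider(prompt)
            except ProviderError as exc:
                last_error = exc
                if attempt < max_retries:
                    # Wait longer after each failed attempt.
                    time.sleep(base_delay * (2 ** attempt))
    raise ProviderError("all providers failed") from last_error


# Stub providers so the sketch runs offline.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("primary unavailable")


def stable_secondary(prompt: str) -> str:
    return f"secondary: {prompt}"
```

Calling `call_with_fallback([flaky_primary, stable_secondary], "ping")` exhausts the retries on the first provider and then returns the second provider's answer.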

Prompts Management and Templating

The tool should support comprehensive prompt management, including the ability to create, edit, save, and version control prompts. It should also allow for rules management and grouping to streamline prompt usage.
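For a sense of what versioned prompt templates look like in practice, here is a small in-memory sketch using Python's standard `string.Template`. `PromptRegistry` is a hypothetical class; real gateways usually persist prompts and attach metadata such as author and change notes.

```python
from string import Template
from typing import Dict, List, Optional


class PromptRegistry:
    """Stores every saved version of each named prompt template."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[Template]] = {}

    def save(self, name: str, text: str) -> int:
        """Save a new version and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(Template(text))
        return len(versions)

    def render(self, name: str, version: Optional[int] = None, **variables) -> str:
        """Render the latest version, or a specific one if requested."""
        versions = self._versions[name]
        template = versions[-1] if version is None else versions[version - 1]
        return template.substitute(**variables)


registry = PromptRegistry()
registry.save("summarize", "Summarize: $text")
registry.save("summarize", "Summarize in $n words: $text")
```

Because old versions are kept, you can roll back a prompt change by rendering with an explicit `version` instead of editing application code.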

Chaining of Prompts

For complex interactions, the ability to chain prompts is crucial. This feature helps maintain context over multiple interactions, enabling more coherent and meaningful conversations.
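A prompt chain can be sketched as a loop in which each step's output feeds the next prompt. The example assumes a hypothetical `fake_llm` stand-in that simply upper-cases its input, and templates that use a `{previous}` placeholder; neither is taken from any particular gateway.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    # Stand-in for a model call: returns the prompt upper-cased.
    return prompt.upper()


def run_chain(
    templates: List[str], user_input: str, llm: Callable[[str], str]
) -> str:
    """Feed each step's output into the next template's {previous} slot."""
    output = user_input
    for template in templates:
        output = llm(template.format(previous=output))
    return output
```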

Fine-Tuning LLMs per Domain per Customer

The gateway should allow for the fine-tuning of LLMs using customer-specific or domain-specific datasets. This ensures that the models deliver more relevant and accurate responses tailored to specific needs.

Contextual Sensitivity and Personalization

Choose a tool that supports contextual sensitivity and personalization, allowing the LLM to adapt responses based on the user's context and preferences.

Cost Management

Effective cost management features are essential, including tracking usage, forecasting costs, and optimizing resource allocation to avoid overspending.
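Usage tracking usually comes down to multiplying token counts by a per-model price and comparing the total against a budget. The sketch below assumes made-up prices and model names (`PRICE_PER_1K`, `model-small`, `model-large`); substitute your providers' actual rates.

```python
from collections import defaultdict
from decimal import Decimal
from typing import DefaultDict

# Hypothetical prices in USD per 1,000 tokens; not real provider rates.
PRICE_PER_1K = {
    "model-small": Decimal("0.0005"),
    "model-large": Decimal("0.01"),
}


class CostTracker:
    """Accumulates spend per team and checks it against a budget."""

    def __init__(self, budget_usd: str) -> None:
        self.budget = Decimal(budget_usd)
        self.spend_by_team: DefaultDict[str, Decimal] = defaultdict(Decimal)

    def record(self, team: str, model: str, tokens: int) -> Decimal:
        cost = PRICE_PER_1K[model] * Decimal(tokens) / Decimal(1000)
        self.spend_by_team[team] += cost
        return cost

    def total(self) -> Decimal:
        return sum(self.spend_by_team.values(), Decimal(0))

    def over_budget(self) -> bool:
        return self.total() > self.budget
```

`Decimal` is used instead of floats so that many small charges add up without rounding drift.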

Custom Model Support

The gateway should support custom models, allowing you to integrate and manage proprietary or specialized LLMs alongside standard offerings.

Caching

Caching capabilities can significantly improve performance by storing frequent queries and responses, reducing the need for repeated processing and saving costs on API calls.
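Here is a minimal sketch of exact-match response caching with a time-to-live. `ResponseCache` is a hypothetical class, and it only helps when the same model receives a byte-for-byte identical prompt; some gateways also offer semantic caching, which this example does not attempt.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Caches responses keyed by a hash of (model, prompt) with a TTL."""

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        raw = f"{model}\x00{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get_or_call(
        self, model: str, prompt: str, call: Callable[[str], str]
    ) -> str:
        key = self._key(model, prompt)
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        # Cache miss or expired entry: call the model and store the result.
        self.misses += 1
        response = call(prompt)
        self._store[key] = (now, response)
        return response
```

Every hit is one API call you do not pay for, which is where the cost savings come from.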

By considering these features, you can choose an LLM Gateway that optimizes your operations, enhances security, and delivers the best possible performance for your specific needs.

List of Top 13 LLM Gateways

- BiFrost By Maxim AI

- Portkey AI Gateway

- Kong AI Gateway

- AI Gateway for Cloudflare

- Gloo Gateway

- Aisera’s LLM Gateway

- LiteLLM

- AI Gateway for IBM API Connect

- LM Studio

- MLflow AI Gateway

- Wealthsimple LLM Gateway

- AI-gateway by GitLab

- aigateway.app

- Tyk API Gateway (can be extended to AI)

Analysis of All the Top 13 LLM Gateways

Tools

BiFrost

Open-source LLM gateway with built-in OpenTelemetry (OTel) observability and an MCP gateway.

Benefits

* Performance: Adds only 11µs of latency while handling 5,000+ RPS (according to the project's GitHub page)

* Reliability: 100% uptime with automatic provider failover

* Native MCP (Model Context Protocol) support for seamless tool integration

* Easy 1-click onboarding & Setup

Pricing

Open Source

Relevant Links

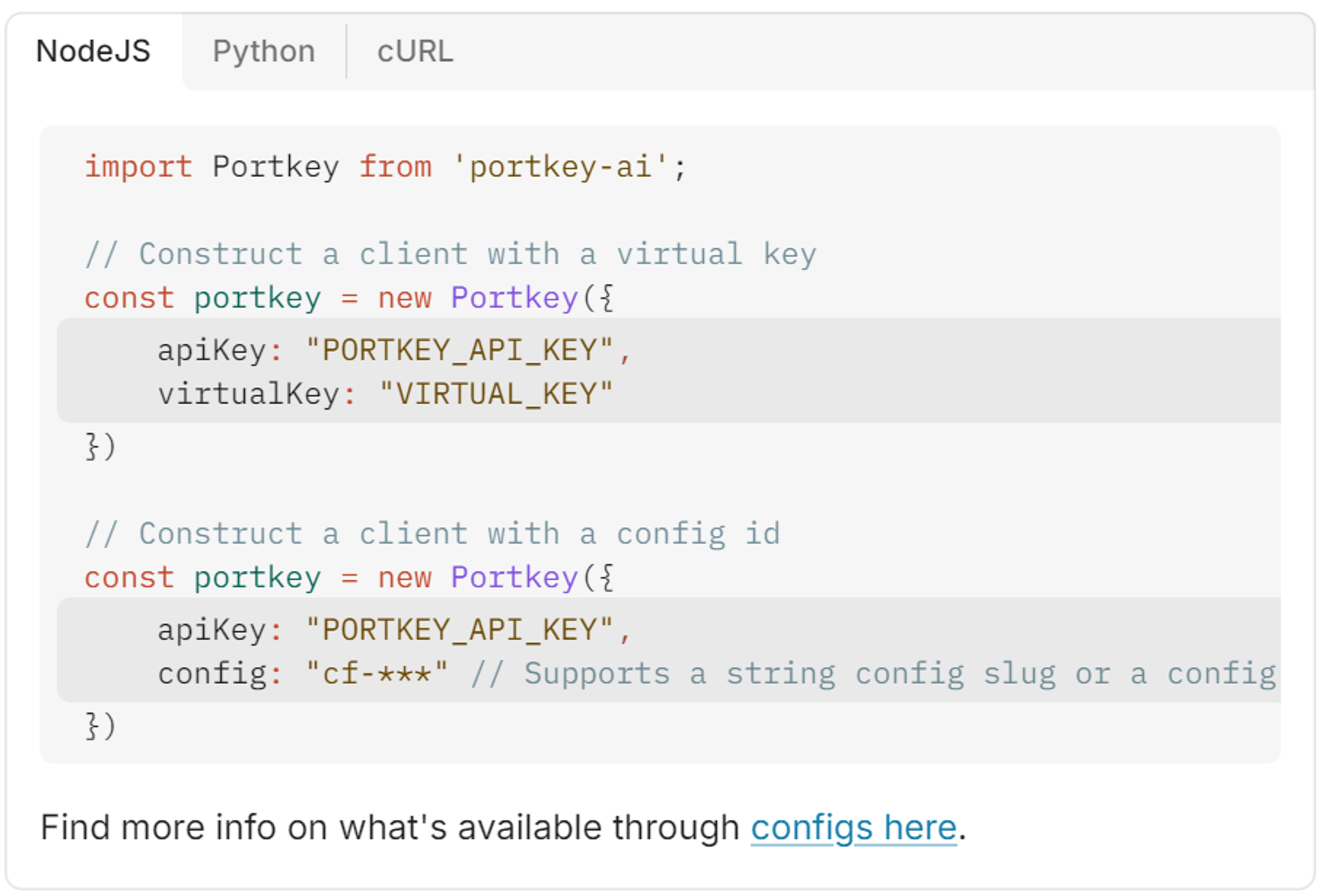

Portkey

Portkey AI Gateway is a robust platform designed to simplify and streamline access to multiple Large Language Models (LLMs) through a unified API. It offers enhanced monitoring, security, and cost management features, making it an ideal choice for businesses looking to manage LLMs efficiently across different providers.

Pricing

Portkey AI Gateway offers flexible pricing plans based on the specific needs and scale of your operations. Its free plan includes 10k logs per month.

Relevant Links

Documentation: https://portkey.ai/docs

Community: https://discord.gg/DD7vgKK299

Product Demo: https://www.youtube.com/watch?v=9aO340Hew2I&t=4s

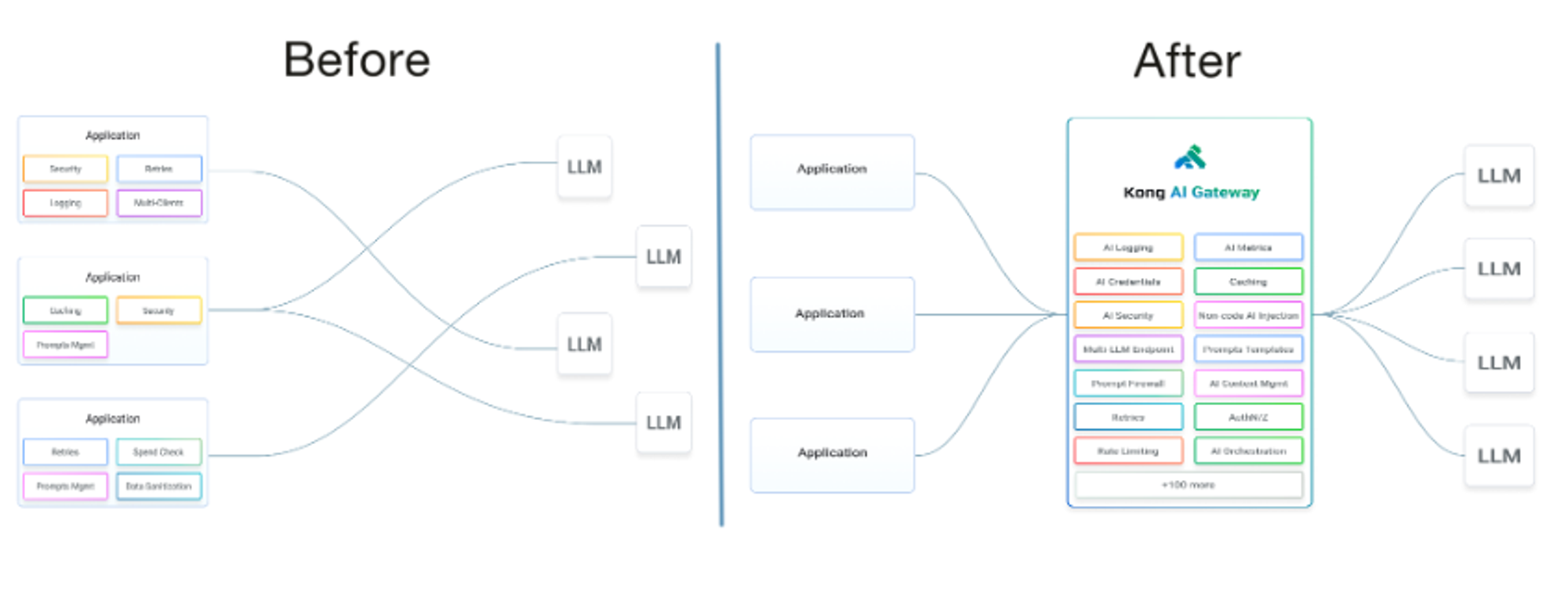

Kong

Kong Gateway's extensive API management capabilities and plugin-based extensibility make it ideal for providing AI-specific API management and governance.

Benefits

The AI Gateway offers a standardized API layer, enabling clients to access multiple AI services from a unified codebase, despite the lack of standard API specifications among AI providers. It also enhances AI service management with features like credential management, usage monitoring, governance, and prompt engineering. Developers can leverage no-code AI Plugins to enrich existing API traffic, seamlessly boosting application functionality.

Considerations

These AI Gateway features can be activated through specialized plugins, using the same model as other Kong Gateway plugins. This allows Kong Gateway users to quickly build a robust AI management platform without needing custom code or unfamiliar tools.

Pricing

Kong does not publish a price estimate for its AI Gateway.

Relevant Links

Kong Gateway Docs: https://docs.konghq.com/gateway/latest/

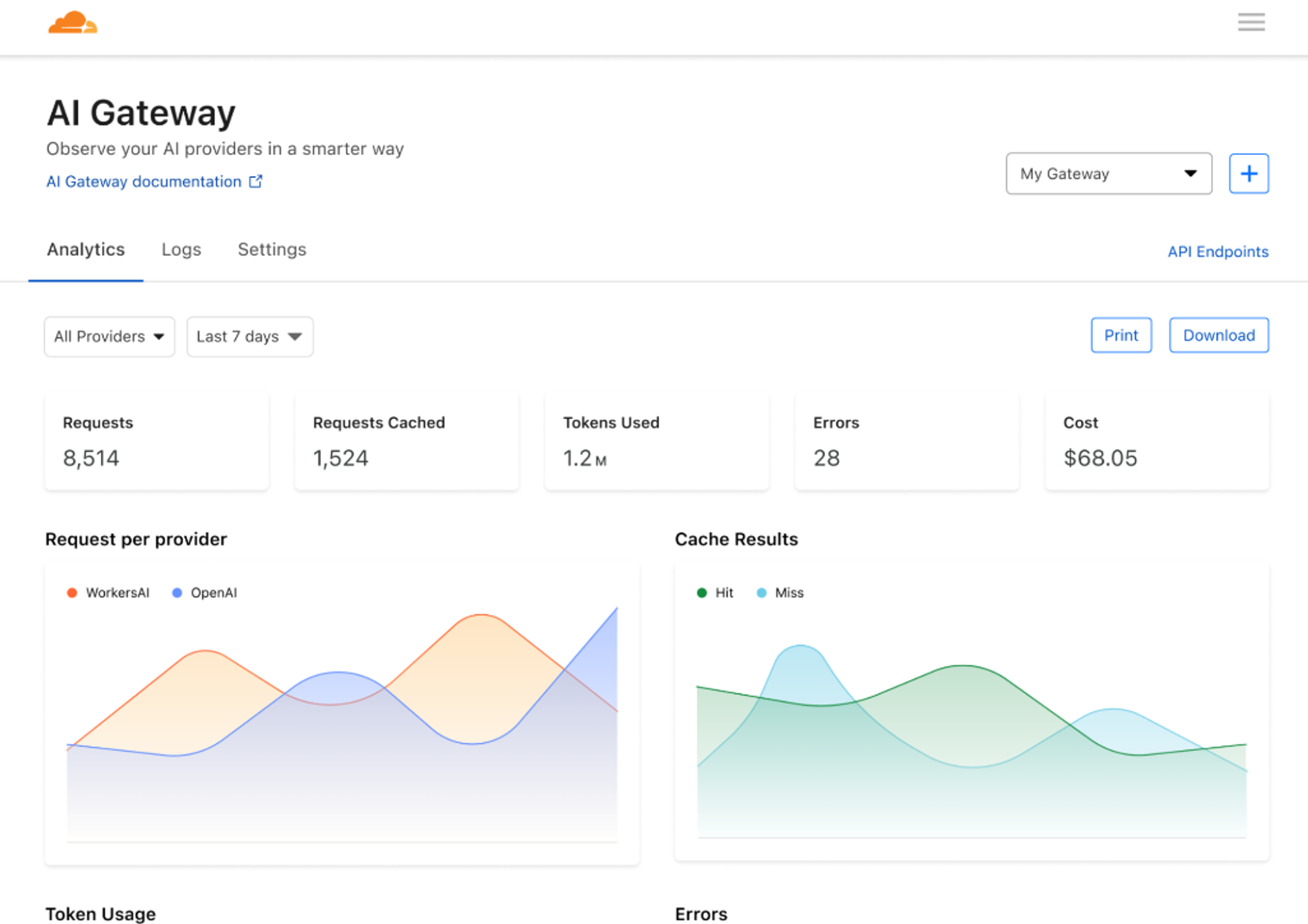

Cloudflare

The Cloudflare AI Gateway acts as an intermediary between your application and the AI APIs it interacts with, such as OpenAI. It helps by caching responses, managing and retrying requests, and providing detailed analytics for monitoring and tracking usage.

Benefits

By taking care of these common AI application tasks, the AI Gateway reduces the engineering workload, allowing you to focus on building your application.

Pricing

Cloudflare offers a free plan with basic features, while paid plans start at $20 per month for more advanced options.

Relevant Links

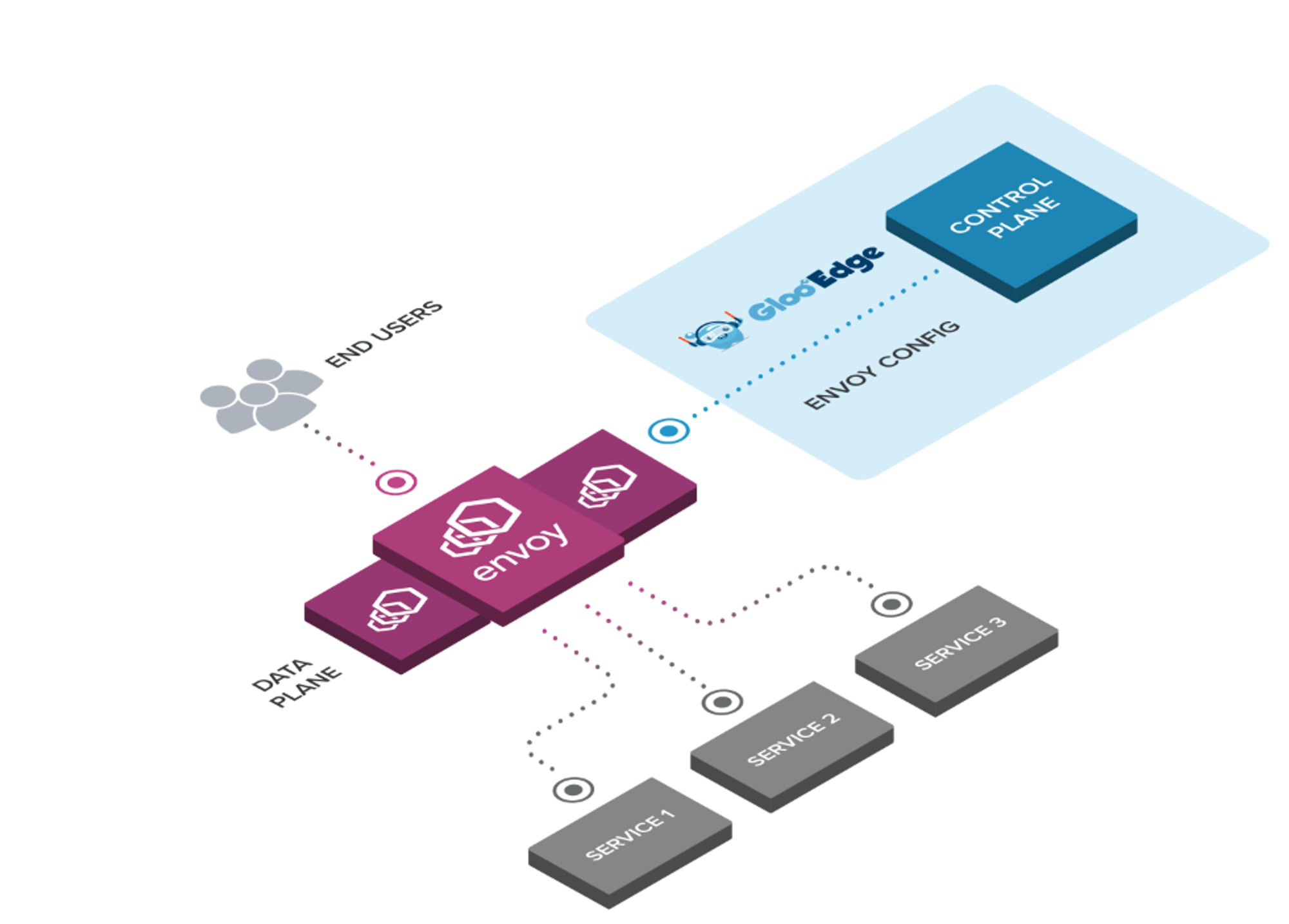

Gloo Gateway

Gloo Gateway is a powerful, Kubernetes-native ingress controller and next-gen API gateway. It stands out for its advanced function-level routing, support for legacy applications, microservices, and serverless architectures, and strong discovery capabilities.

Benefits

With numerous features and seamless integration with top open-source projects, Gloo Gateway is specifically designed to support hybrid applications, allowing different technologies, architectures, protocols, and cloud environments to work together.

Pricing

Solo.io offers customized pricing for Gloo Gateway based on your specific needs and usage.

Relevant Links

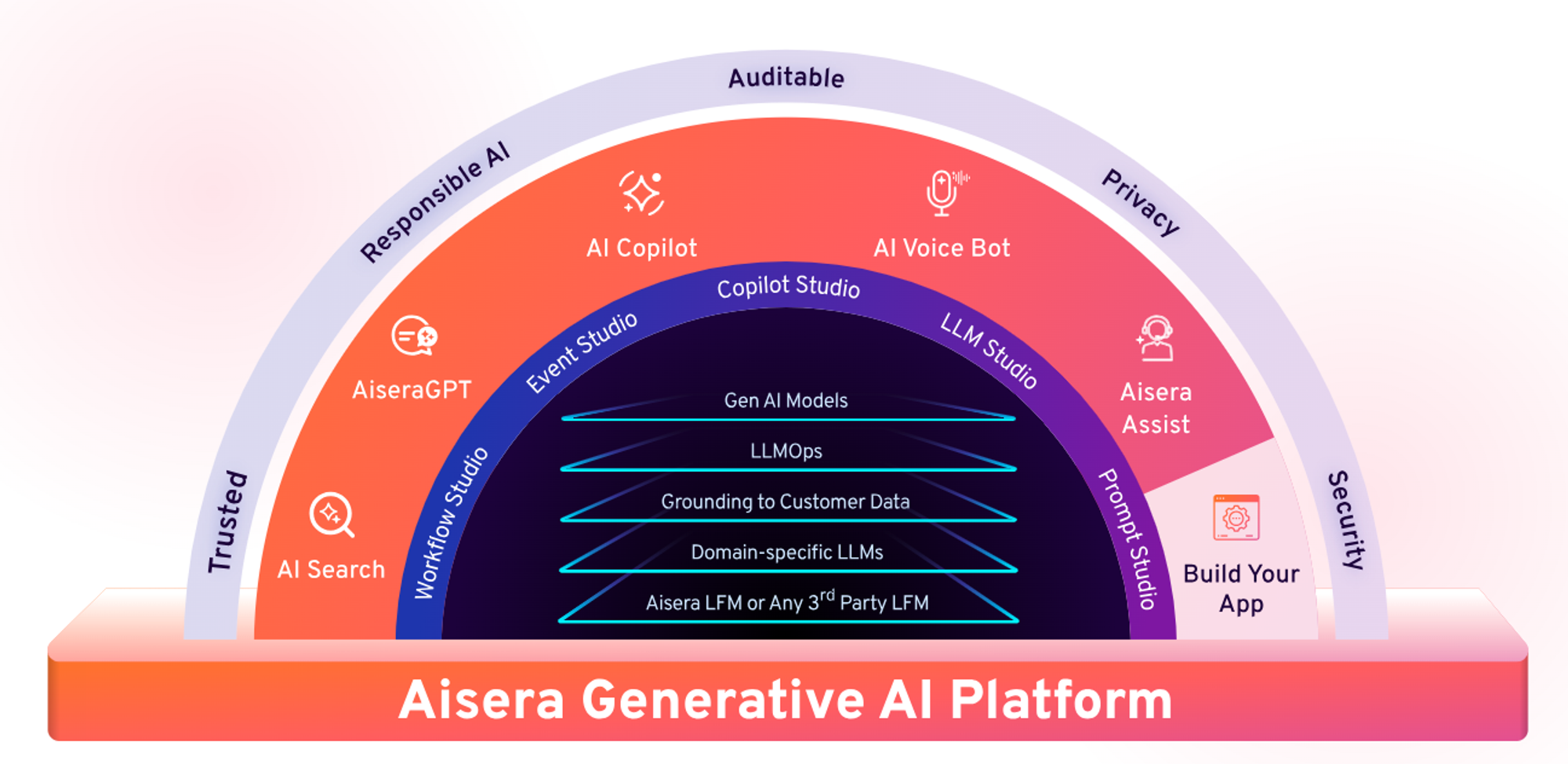

Aisera

Aisera’s LLM Gateway seamlessly integrates any LLM into its AI Service Experience platform, transforming it into a Generative AI app or AI Copilot using AiseraGPT and Generative AI.

Benefits

This allows for a deep understanding of domain-specific nuances and automates complex tasks through AI-driven action workflows, where chatbots evolve into action-oriented bots.

Pricing

Aisera does not publish a price estimate for its LLM Gateway.

Relevant Links

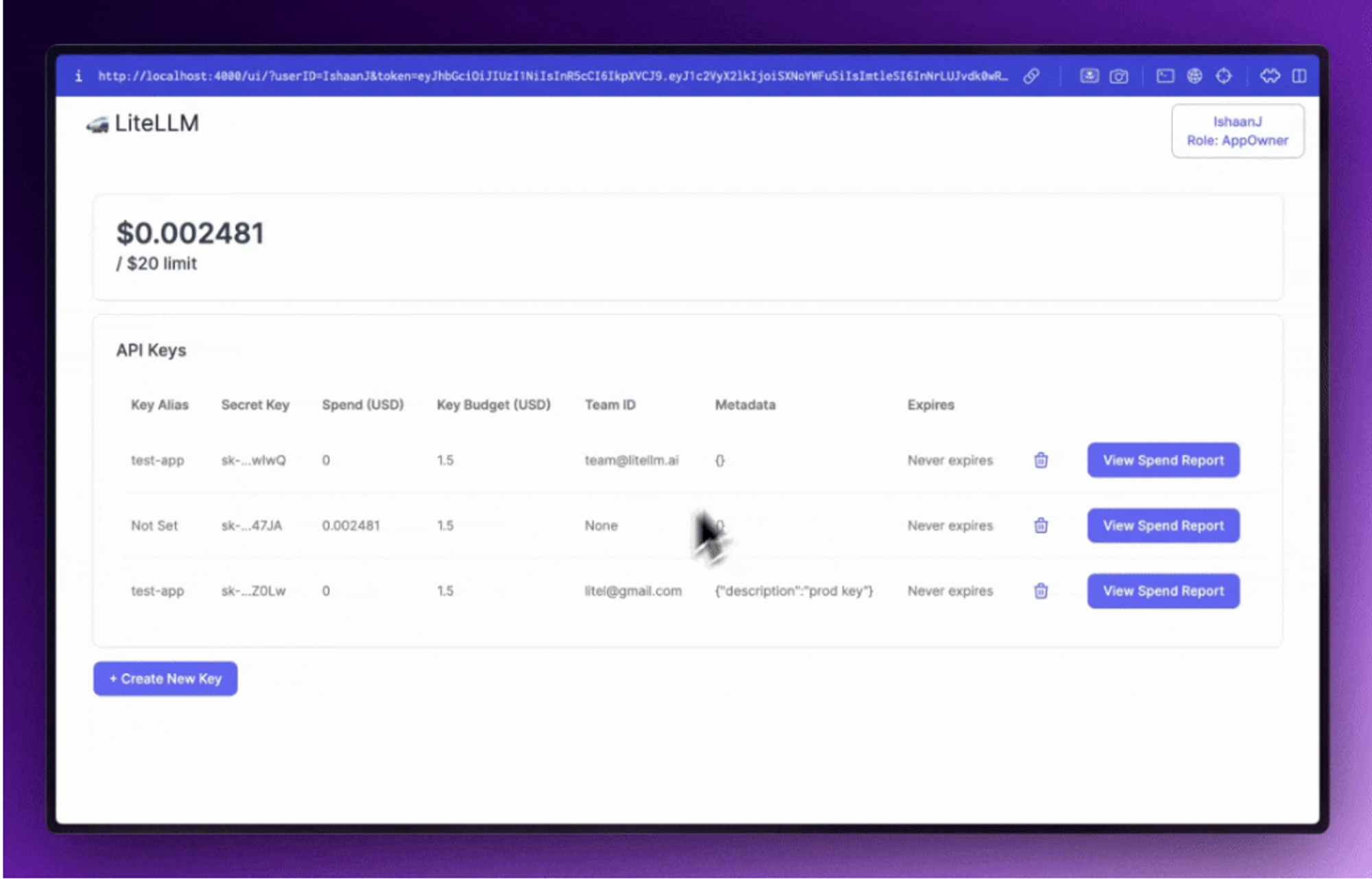

LiteLLM

LiteLLM, short for "Lightweight Large Language Model Library," streamlines the use of advanced AI models by acting as a versatile gateway to various state-of-the-art AI models.

Benefits

It provides a unified interface, allowing you to easily access and utilize different AI models for tasks like writing, comprehension, and image creation, regardless of the provider.

Considerations

LiteLLM integrates seamlessly with leading providers such as OpenAI, Azure, Cohere, and Hugging Face, offering a simplified and consistent experience for leveraging AI in your projects.

Pricing

LiteLLM offers a free plan with basic features, while paid plans start at $19 per month, providing additional capabilities.

Relevant Links

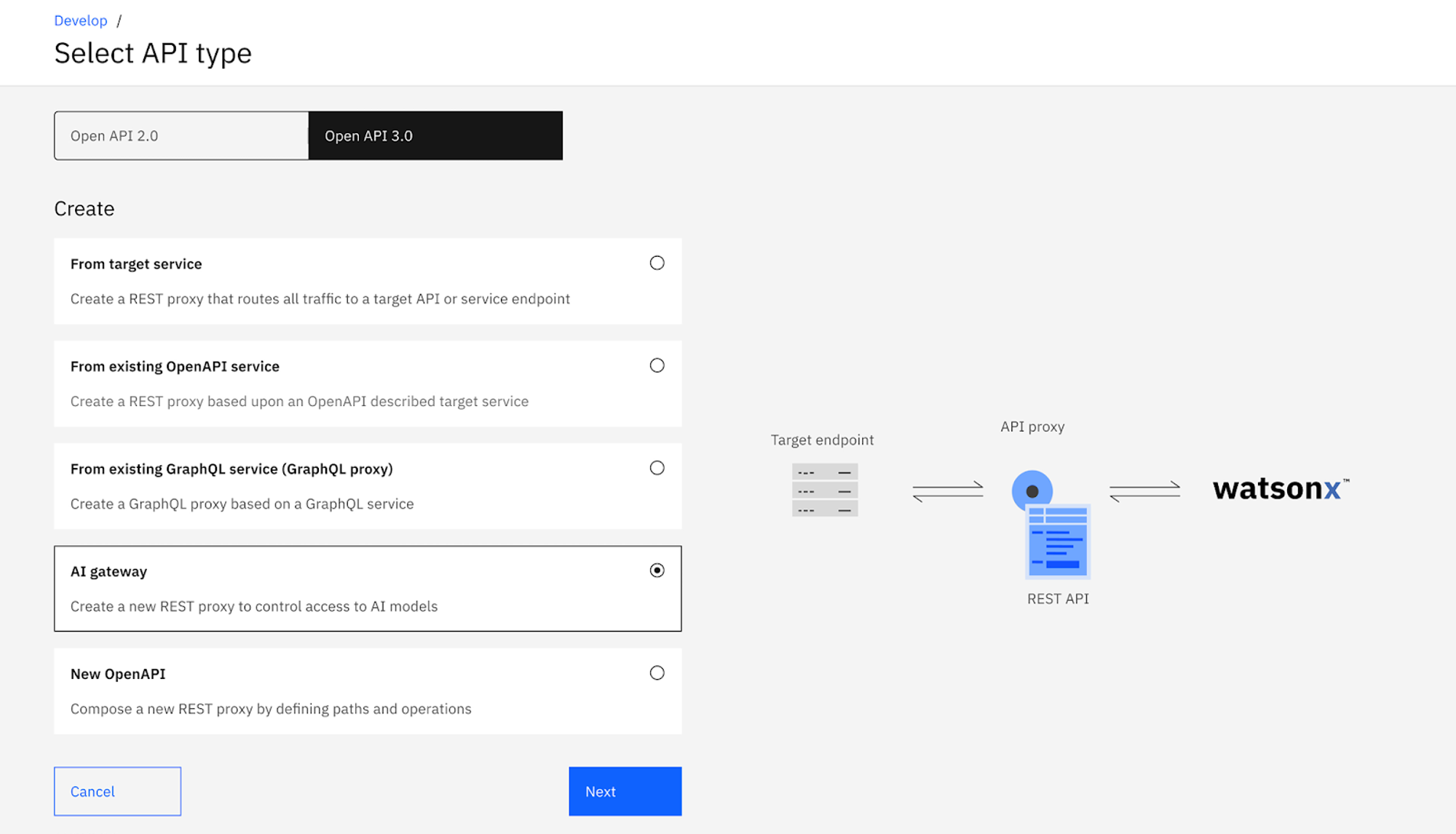

IBM API Connect

The AI Gateway in IBM API Connect is a feature that allows organizations to securely integrate AI-powered APIs into their applications. This gateway facilitates the seamless connection between your applications and AI services, both within and outside your organization.

Considerations

You can address unexpected or excessive AI service costs by limiting the rate of requests within a set time frame and caching AI responses. Built-in analytics and dashboards provide visibility into the enterprise-wide use of AI APIs.

By routing LLM API traffic through the AI Gateway, you can centrally manage AI services with policy enforcement, data encryption, sensitive data masking, access control, and audit trails, all of which support your compliance requirements.
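The rate-limiting idea mentioned above can be illustrated with a fixed-window counter per client. This is a generic sketch, not IBM API Connect's implementation or configuration: `FixedWindowLimiter` is a hypothetical class, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Allows at most `limit` requests per client in each time window."""

    def __init__(
        self,
        limit: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window = window_seconds
        self._clock = clock
        # client_id -> (window start time, requests seen in this window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, client_id: str) -> bool:
        now = self._clock()
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window:
            # The previous window has expired; start a fresh one.
            start, count = now, 0
        if count >= self.limit:
            self._windows[client_id] = (start, count)
            return False
        self._windows[client_id] = (start, count + 1)
        return True
```

A gateway would reject or queue the request whenever `allow` returns `False`, which caps how much any single client can spend in a given period.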

Pricing

IBM API Connect offers a variety of pricing options tailored to different business needs, including subscription and pay-as-you-go models. Pricing details depend on the deployment type (cloud, on-premises, or hybrid) and specific usage requirements.

Relevant Links

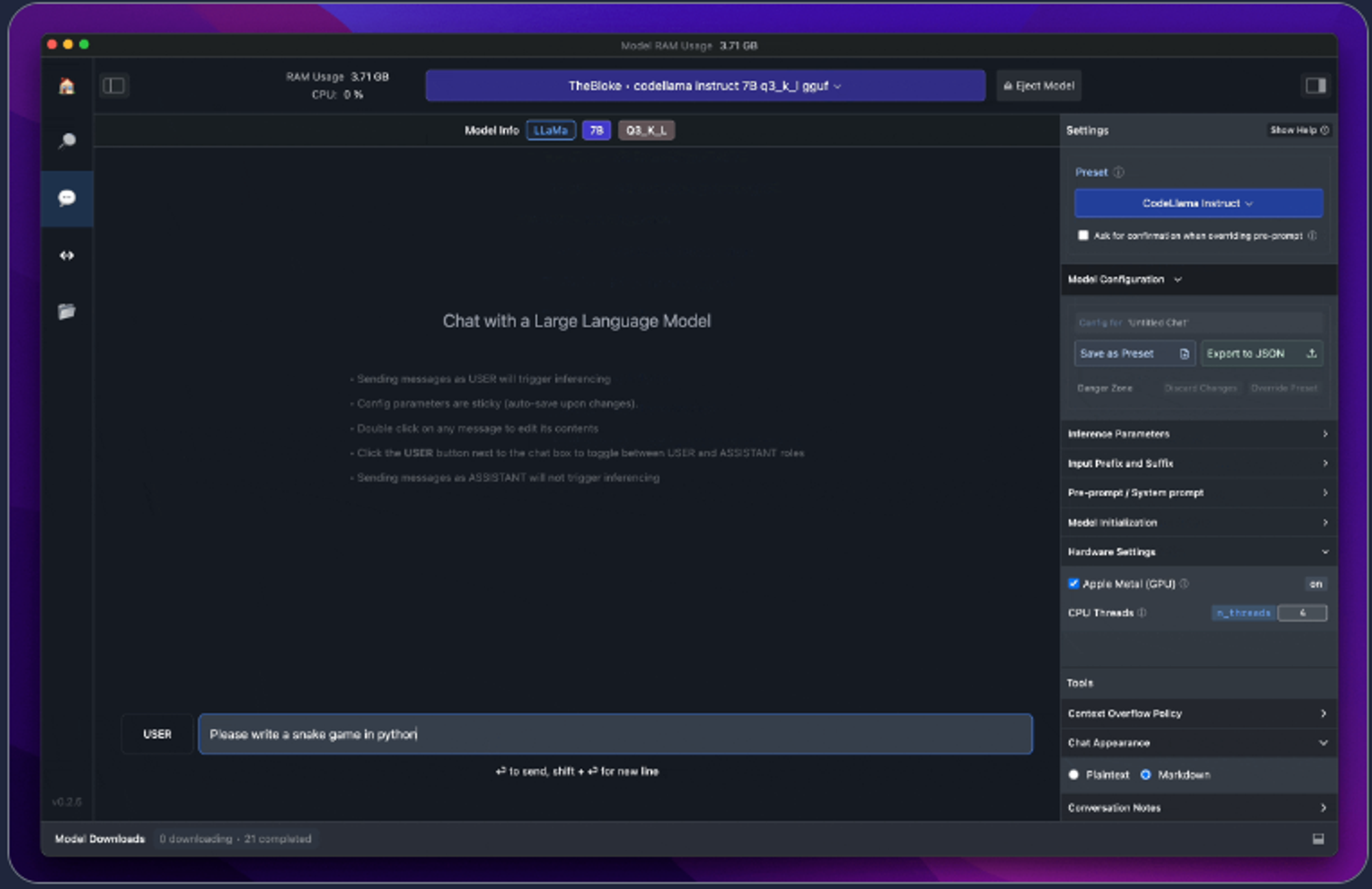

LM Studio

LM Studio is a platform designed to help you easily fine-tune and deploy large language models (LLMs) with a user-friendly interface. It simplifies the process of customizing LLMs, making it accessible even if you don't have extensive AI or machine learning expertise.

Benefits

With LM Studio, you can leverage pre-trained models and tailor them to specific use cases or domains, deploy them efficiently, and manage their performance through intuitive tools and dashboards.

Pricing

LM Studio does not list pricing on its website.

Relevant Links

MLflow

One of the key benefits of the MLflow AI Gateway is its centralized management of API keys. By securely storing these keys in one location, the service enhances security by reducing the risk of exposing sensitive information across the system. This approach also eliminates the need for embedding API keys in code or requiring end-users to handle them, thus minimizing security vulnerabilities.

Considerations

The gateway is designed to be flexible, allowing easy updates to configuration files for defining and managing routes. This adaptability ensures that new LLM providers or types can be incorporated into the system without necessitating changes to the applications interacting with the gateway. This makes the MLflow AI Gateway service particularly valuable in dynamic environments where quick adaptation is essential.

Overall, the MLflow AI Gateway service offers a simplified and secure approach to managing LLM interactions, making it an excellent choice for organizations that frequently utilize these models.

Wealthsimple

The Wealthsimple LLM Gateway is an internal tool developed by Wealthsimple to securely and reliably manage interactions with Large Language Models (LLMs) from providers like OpenAI and Cohere.

Benefits

The gateway helps mitigate accuracy issues at the application level, such as reducing the occurrence of "hallucinations," where LLMs generate incorrect or nonsensical outputs. It consists of two main components: a recreated chat frontend that restricts data sharing to the API, and an API wrapper that interfaces with LLM endpoints, with plans to support more providers in the future.

Considerations

Since its internal launch in April 2023, the Wealthsimple LLM Gateway has facilitated over 72,000 requests for various use cases, including code generation, content editing, and general inquiries, allowing Wealthsimple employees to explore LLM technology responsibly.

Pricing

Specific pricing details are not provided on the official website.

Relevant Links

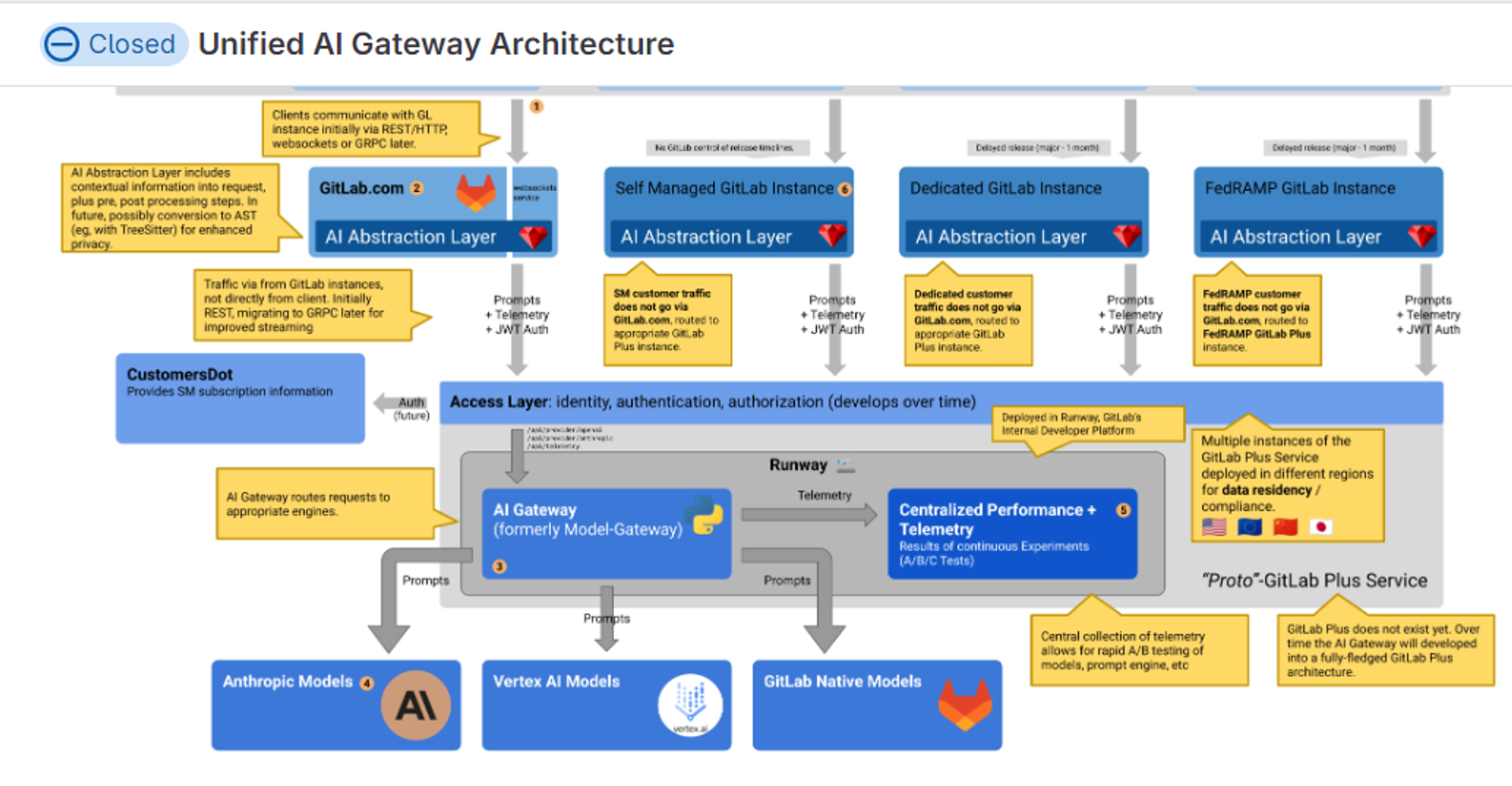

GitLab

The AI Gateway by GitLab is a standalone service designed to provide seamless access to AI features for all GitLab users, regardless of their instance type (self-managed, dedicated, or GitLab.com).

Benefits

It acts as a centralized point for managing AI integrations, offering a high-level interface to interact with various AI services securely and efficiently. The AI Gateway also supports policy enforcement, data encryption, and other security measures to ensure that AI services are utilized responsibly and in compliance with organizational requirements.

Pricing

The pricing details for the AI Gateway by GitLab are not explicitly listed on the GitLab website.

Relevant Links

aigateway.app

aigateway.app allows users to integrate multiple AI services through a single API, simplifying the process of switching between different models without the need to modify application code.

Considerations

This platform is designed to enhance efficiency in deploying AI models by providing features such as centralized API management, security controls, and cost optimization. aigateway.app is particularly useful for developers and organizations that need to work with multiple AI providers and want to streamline their AI integration processes.

Pricing

aigateway.app offers a free plan, with paid plans starting at $9/month and custom enterprise options available.

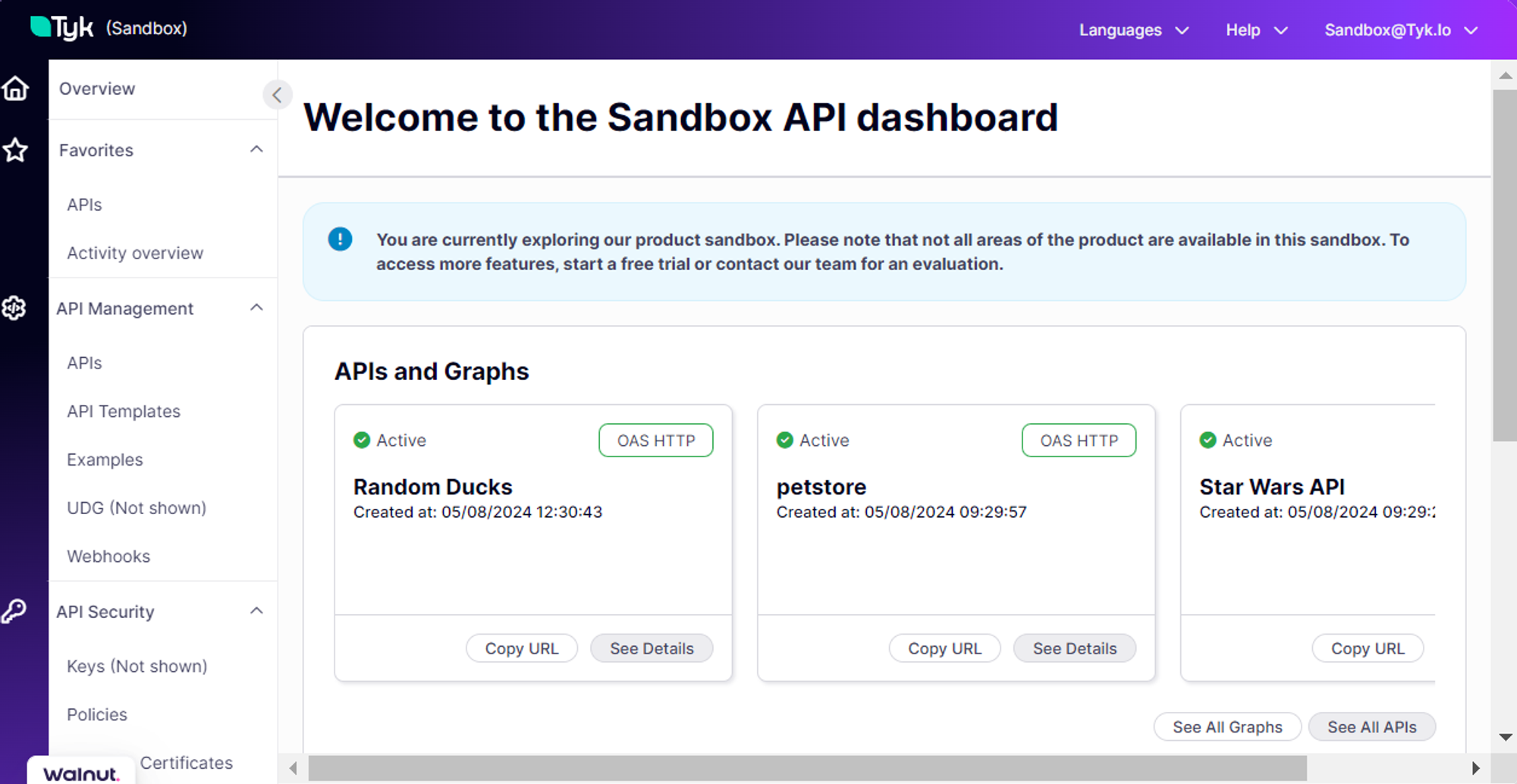

Tyk

Tyk is an open-source API management platform that provides tools for designing, deploying, and managing APIs securely and efficiently.

Benefits

Tyk integrates AI technologies to streamline and automate the API development process. Here’s how it uses AI:

- Automated API Generation: AI can analyze existing data models, documentation, or even live traffic to suggest or generate API endpoints automatically, reducing the manual effort required to design APIs.

- Intelligent Recommendations: AI provides recommendations for best practices in API design, helping developers to optimize the structure, security, and performance of their APIs.

- Enhanced Testing: AI can be used to simulate various scenarios and test API responses more effectively, identifying potential issues and optimizing the API’s reliability and performance before deployment.

- API Documentation: AI can assist in generating comprehensive API documentation based on the designed API, ensuring that the documentation is consistent, up-to-date, and aligned with the API’s actual behavior.

- Design Optimization: AI tools can suggest improvements in API design by analyzing usage patterns, helping developers to create more efficient and user-friendly APIs.

Considerations

These AI-driven features help Tyk users accelerate the API development process, improve design quality, and reduce errors, ultimately leading to more robust and scalable APIs.

Pricing

Tyk offers customized pricing plans, including a free option, with paid plans starting at $450 per month.

Relevant Links

Conclusion

When selecting an LLM Gateway, your choice should align with your specific needs and the scale of your operations.

If you're a startup experimenting with LLM integrations and need a quick and cost-effective solution, starting with the free tiers of tools like BiFrost, Portkey AI Gateway, or LiteLLM can provide a solid foundation. These platforms are well-supported by active communities and offer a straightforward path to managing LLM interactions.

As you move into production and require more robust features, such as enhanced security and local deployment options, platforms like BiFrost, Portkey AI Gateway, or Gloo Gateway are ideal. These solutions are well-suited for scaling businesses that need to manage LLMs without sending data outside their environment.

For large enterprises with high LLM integration volumes, reliability and comprehensive management features become critical. In this case, solutions like Kong AI Gateway or AI Gateway by GitLab offer the necessary robustness and enterprise-grade support.

Finally, for organizations focusing on advanced LLM features like prompt improvement and fine-tuning, specialized tools like LM Studio or Aisera’s LLM Gateway offer sophisticated options to enhance your AI-driven projects.
