Introduction to the Top 14 LLM Frameworks

Large Language Models (LLMs) have rapidly emerged as transformative tools in the world of artificial intelligence, capable of understanding, generating, and interacting with human-like text. These models are being employed across a myriad of applications, from simple tasks like answering questions and summarizing articles to more complex ones like generating detailed reports or engaging in dynamic conversations.

According to Forrester, leading firms are accelerating their automation efforts fueled by a new wave of AI enthusiasm, with LLMs playing a pivotal role in this transformation.

However, the true potential of LLMs is unlocked when they are integrated within specialized frameworks. These frameworks provide the infrastructure and tools necessary to harness the power of LLMs effectively, allowing developers to build sophisticated, AI-driven applications. By using these frameworks, one can manage the complexities of deploying large models, connecting different AI tools, and optimizing performance, thereby making AI solutions more accessible, scalable, and efficient.

The primary purpose of these LLM frameworks is to facilitate the smooth operation of various AI-driven tasks, which might include anything from retrieving information and fine-tuning models to performing a series of actions seamlessly. In essence, these frameworks are the backbone of modern AI applications, enabling more intelligent, cohesive, and efficient operations.

As the demand for AI-driven solutions continues to grow, so does the importance of selecting the right LLM framework. The right choice can significantly influence the efficiency, scalability, and overall success of your AI projects. Therefore, understanding the features and capabilities of various LLM frameworks is crucial for developers, data scientists, and organizations looking to leverage AI in their operations.

Key Features to Consider When Choosing an LLM Framework

When selecting a framework to work with Large Language Models (LLMs), it's important to consider several key features that can significantly impact the effectiveness and efficiency of your AI projects. These features ensure that the framework not only meets your current needs but also provides the flexibility and scalability to grow with your future requirements.

1. Model Support

One of the most critical aspects of any LLM framework is its support for various models. This includes:

- Availability of Pre-Trained Models: A robust framework should offer a wide range of pre-trained models that can be immediately leveraged for various tasks, saving significant time and resources.

- Fine-Tuning Capabilities: The ability to fine-tune models on your own datasets is essential for customizing the model to specific use cases, ensuring higher accuracy and relevance.

- Model Variety: Diverse model support allows for greater flexibility in choosing the right tool for different tasks, whether it’s for natural language understanding, generation, or specific domain applications.

2. Ease of Use

An ideal LLM framework should be user-friendly, enabling both beginners and experts to deploy and manage models with ease. This includes intuitive interfaces, comprehensive documentation, and the availability of tutorials or guides. The framework should simplify complex processes like model deployment, fine-tuning, and integration with other tools, ensuring that users can focus on building and improving their AI applications rather than struggling with technical hurdles.

3. Scalability

Scalability is a crucial feature, especially for organizations that deal with large datasets or require high computational power. A scalable framework should offer:

- Support for Distributed Training: The ability to train models across multiple GPUs or nodes is essential for handling large models and datasets efficiently.

- Multi-Model Support: Managing and deploying multiple models simultaneously should be seamless, allowing for a more dynamic and robust AI environment.

4. Framework Compatibility

The framework's ability to integrate with popular machine learning frameworks (such as TensorFlow, PyTorch, or Keras) is vital for ensuring that your workflow remains smooth and that you can leverage existing tools and libraries. Compatibility with these frameworks allows for easier model training, evaluation, and deployment, enhancing the overall flexibility of your AI projects.

5. API Access

Having well-documented and accessible APIs is essential for integrating the LLM framework into your existing systems and workflows. APIs enable automation, customization, and extension of the framework’s capabilities, making it easier to build complex applications that require interaction with other software or platforms.

6. Cross-Platform Support

In today’s diverse tech landscape, cross-platform support is a necessity. The ability to deploy models across different environments—be it cloud, on-premises, or edge devices—ensures that your AI applications can reach a broader audience and adapt to various operational requirements.

7. Performance Monitoring

Effective performance monitoring tools are essential for tracking and optimizing model performance in real time. Key metrics like latency, throughput, and error rates should be easily accessible, allowing for quick adjustments and improvements. Additionally, logging and auditing capabilities are crucial for troubleshooting and maintaining model reliability.
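As a rough illustration of how these metrics fall out of raw request logs, here is a minimal sketch. The `RequestRecord` fields and the `summarize` helper are hypothetical names invented for this example, not part of any framework listed in this article.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """One served request (hypothetical log entry)."""
    latency_s: float  # wall-clock seconds to answer
    tokens_out: int   # tokens generated for the reply
    ok: bool          # False if the request failed


def summarize(records: list[RequestRecord], window_s: float) -> dict[str, float]:
    """Aggregate latency, throughput, and error rate over one time window."""
    if not records or window_s <= 0:
        raise ValueError("need at least one record and a positive window")
    latencies = sorted(r.latency_s for r in records)
    # Nearest-rank 95th percentile: rank = ceil(0.95 * n), done in integers.
    rank = (95 * len(latencies) + 99) // 100
    return {
        "mean_latency_s": mean(latencies),
        "p95_latency_s": latencies[rank - 1],
        "tokens_per_s": sum(r.tokens_out for r in records) / window_s,
        "error_rate": sum(not r.ok for r in records) / len(records),
    }
```

A real deployment would usually export these values to a metrics system rather than compute them in process, but the definitions stay the same.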

8. Model Explainability

As AI models become more complex, understanding and interpreting their predictions is increasingly important. Features that provide model explainability help users to trust the model's outputs and ensure transparency. This includes tools for interpreting predictions, detecting biases, and understanding how different inputs affect the model’s decisions.

9. Security

Data privacy and access control are paramount, especially when working with sensitive information. The chosen framework should offer robust security features, including encryption, role-based access controls, and compliance with data protection regulations. Ensuring that your AI applications are secure protects both your data and the integrity of your models.

10. Cost Efficiency

Cost efficiency is a key consideration, particularly for organizations with budget constraints. A framework’s pricing model should align with your financial resources while offering the necessary features. This might include flexible pricing options, such as pay-as-you-go models or subscription plans, that allow you to scale usage according to your needs.
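The trade-off between pay-as-you-go and subscription pricing comes down to simple arithmetic. The sketch below assumes a per-1,000-token price and a flat monthly fee; both numbers in the usage note are made up for illustration and do not reflect any vendor's actual rates.

```python
def pay_as_you_go_cost(requests: int, tokens_per_request: int,
                       price_per_1k_tokens: float) -> float:
    """Monthly cost when billed per 1,000 tokens processed."""
    return requests * tokens_per_request / 1000 * price_per_1k_tokens


def cheaper_plan(requests: int, tokens_per_request: int,
                 price_per_1k_tokens: float, subscription_fee: float) -> str:
    """Return which pricing model costs less for the expected monthly usage."""
    usage_cost = pay_as_you_go_cost(requests, tokens_per_request, price_per_1k_tokens)
    return "pay-as-you-go" if usage_cost < subscription_fee else "subscription"
```

For example, with a hypothetical price of 0.002 per 1,000 tokens and a flat fee of 50, a workload of 10,000 requests at 500 tokens each costs about 10 on usage billing, so pay-as-you-go wins; at one million requests the subscription becomes cheaper.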

11. Community and Ecosystem

A strong community and ecosystem can greatly enhance the value of an LLM framework. Active community support, along with a rich ecosystem of plugins, third-party tools, and integrations, can accelerate development and problem-solving. Moreover, seamless integration with other tools and services in your tech stack—such as data management tools, CI/CD pipelines, and MLOps platforms—ensures that your AI projects can be efficiently managed and scaled.

List of Top 14 LLM Frameworks

- Haystack

- Hugging Face Transformers

- LlamaIndex

- LangChain

- Vellum AI

- Flowise AI

- Galileo

- AutoChain

- vLLM

- OpenLLM

- AutoGPT

- Langdock

- NeMo (NVIDIA)

- Microsoft Guidance

Tools

Haystack

Haystack is an open-source framework designed to build AI applications, especially those involving large language models (LLMs).

Benefits

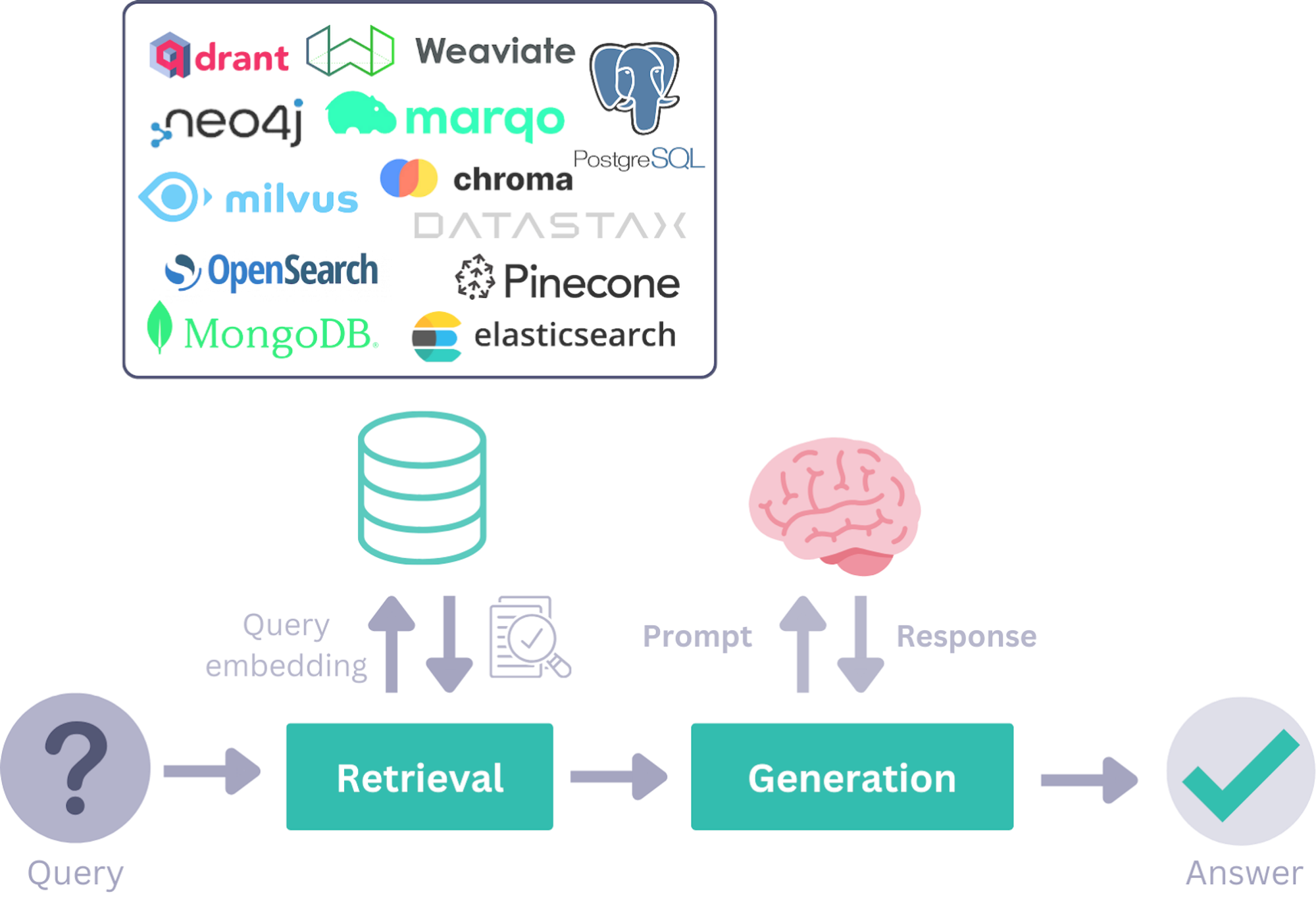

- It excels in creating advanced search systems, retrieval-augmented generation (RAG) pipelines, and other NLP tasks.

- Haystack’s modular design allows for easy customization and integration with popular AI tools like Hugging Face and OpenAI.
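To make the retrieval-augmented generation idea concrete, here is a minimal sketch of the two core steps: retrieve relevant passages, then build a prompt around them. It uses only the Python standard library and a naive word-overlap score; it is not Haystack's API, and the `retrieve` and `build_prompt` names are assumptions for this example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (stand-in for a real retriever)."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the prompt an LLM would receive: retrieved context, then the question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Use only this context:\n{joined}\n\nQuestion: {query}\nAnswer:"
```

A production pipeline would typically swap the overlap score for embedding similarity or a search backend, and send the prompt to a model instead of returning it.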

Considerations

- Beginners face a steeper learning curve, and the framework's heavy reliance on Elasticsearch can cause some performance issues.

Pricing

Haystack is an open-source framework.

Relevant Links

Documentation: https://docs.haystack.deepset.ai/docs

GitHub: https://github.com/deepset-ai/haystack/

Integrations: https://haystack.deepset.ai/integrations

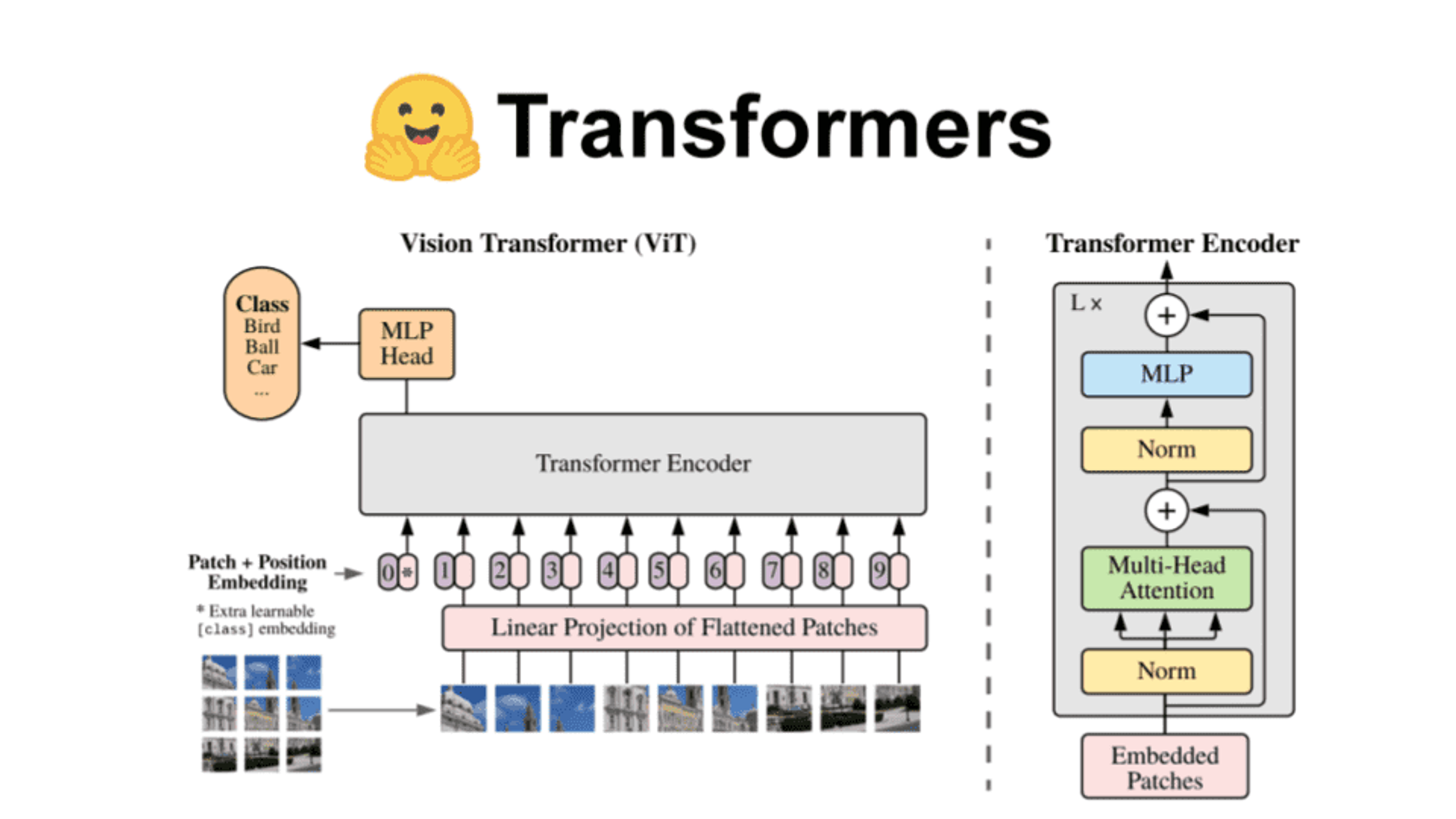

Hugging Face Transformers

Hugging Face Transformers is an open-source library that provides easy access to state-of-the-art natural language processing (NLP) models, including BERT, GPT, T5, and more. It simplifies the process of leveraging these models for tasks like text classification, summarization, translation, and conversational AI.

Benefits

- Access to a vast array of pre-trained models covering a wide range of NLP tasks, all under a unified interface.

- Intuitive API and extensive documentation make it accessible to both beginners and advanced users.

- Supported by a large, active community that contributes to a rapidly growing ecosystem of models, tools, and tutorials.

- Compatible with TensorFlow and PyTorch, allowing users to choose their preferred deep learning framework.

Considerations

- Running large models, especially in production, can be resource-intensive, requiring significant computational power and memory.

- While pre-trained models are easy to use, fine-tuning them on custom datasets can be complex and requires a good understanding of machine learning.

- Some models have specific licenses that may limit their use in commercial applications, so it is essential to review these before deployment.

Pricing

Hugging Face offers a range of pricing plans, including a free tier with limited access, and paid plans that start at $9 per month, providing additional features like enhanced API access, training capabilities, and higher usage limits for more advanced needs.

Relevant Links

Docs: https://huggingface.co/docs/transformers/en/index

GitHub: https://github.com/huggingface/transformers

Community: https://huggingface.co/docs/transformers/en/community

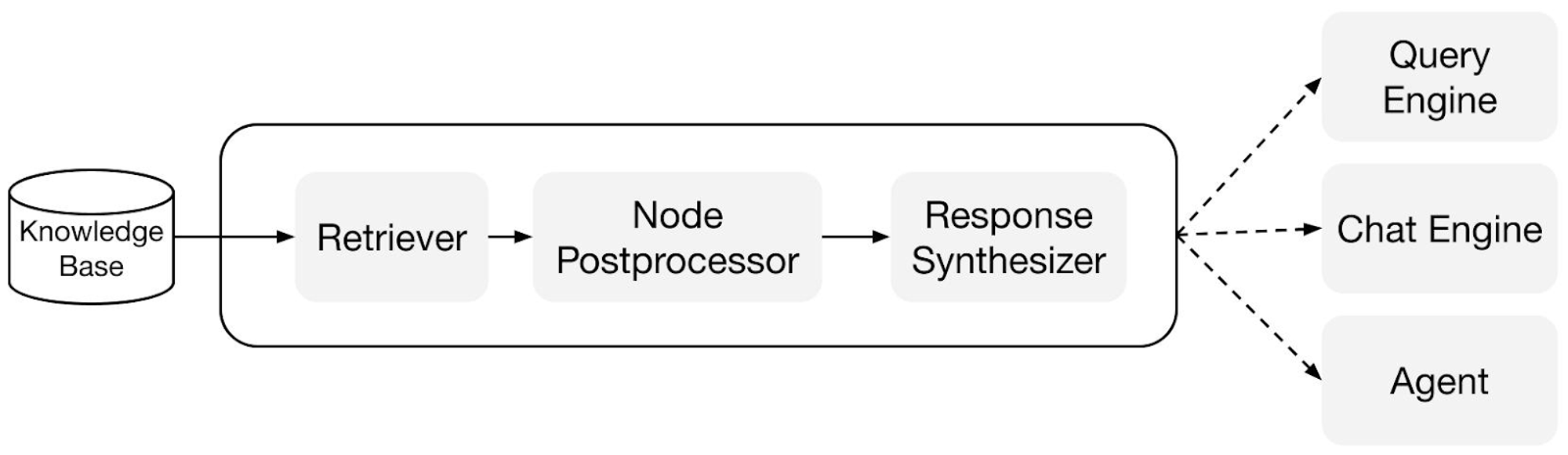

LlamaIndex

LlamaIndex is a framework for building context-augmented generative AI applications with LLMs, including agents and workflows.

Benefits

- LlamaIndex effectively manages the chunking process for large text inputs.

- It supports sophisticated retrieval techniques, such as breaking down a question into sub-questions or conducting multiple queries against the LLM, with the ability to self-evaluate and iterate if the initial output isn't optimal.

- LlamaIndex provides built-in embedding retrieval, which is especially useful if your vector store doesn't natively support this feature.

- It allows for the option to stream responses from the LLM in real-time, giving a more interactive experience similar to ChatGPT's web version, where text appears as it's being typed.

- The framework supports shorter, more efficient implementations, streamlining the overall development process.
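The chunking step mentioned above can be pictured as a sliding window over the text. The following is a simplified sketch that splits on whitespace and counts words; it is not LlamaIndex's implementation, and the `chunk_text` name and its parameters are assumptions for illustration.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into word-based chunks, where consecutive chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, which tends to help retrieval at the cost of some duplicated storage.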

Considerations

- LlamaIndex’s documentation is limited, with most content focused on examples. If your use case isn’t covered, you may need to dig into the source code, as I had to when implementing metadata filters.

Pricing

LlamaIndex pricing is based on the computational costs of running models, data processing, and storage, allowing for flexible cost management depending on the complexity and scale of your AI application.

Relevant Links

Docs: https://ts.llamaindex.ai/

GitHub: https://github.com/run-llama/LlamaIndexTS

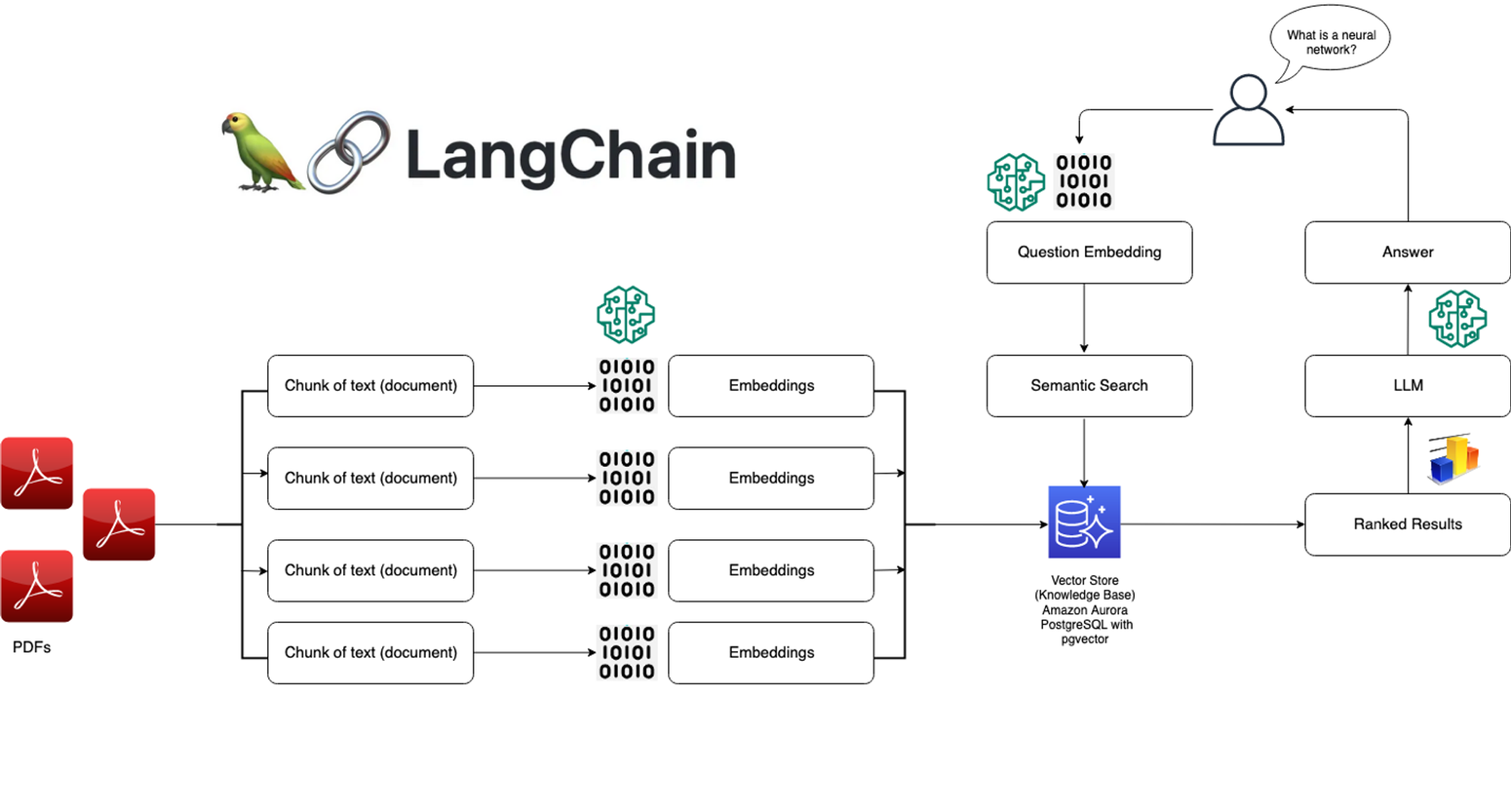

LangChain

LangChain is a framework designed to streamline the development of applications that utilize large language models (LLMs). It excels in integrating LLMs into various use cases, such as document analysis, summarization, chatbots, and code analysis, offering a unified platform for tasks commonly associated with language models.

Benefits

- It reduces the complexity of building LLM applications by providing tools that streamline the integration and management of large language models.

- LangChain’s design allows easy swapping of components, enabling quick customization and scalability as project needs change.

- With strong community support and extensive integrations, LangChain offers access to a broad range of tools and resources, enhancing its functionality from data retrieval to workflow automation.
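The idea of swappable components can be sketched with plain functions. This is a conceptual illustration only, not LangChain's API: `make_chain`, `fake_llm`, and `reversing_llm` are hypothetical names, and the "models" are placeholders that transform strings.

```python
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps so the output of each one feeds the next."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def prompt_template(question: str) -> str:
    """Wrap the user's question in a fixed instruction."""
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    """Placeholder for a model call; upper-cases the prompt."""
    return prompt.upper()


def reversing_llm(prompt: str) -> str:
    """A second placeholder model; reverses the prompt."""
    return prompt[::-1]


def strip_output(text: str) -> str:
    """Post-process the model output by trimming whitespace."""
    return text.strip()
```

Because every step shares the same signature, replacing `fake_llm` with `reversing_llm` in `make_chain(prompt_template, fake_llm, strip_output)` changes the model without touching the template or the post-processing.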

Considerations

- LangChain's documentation lacks depth and clarity.

- While flexible, the modular design can introduce performance overhead, potentially slowing applications compared to more streamlined, purpose-built frameworks.

Pricing

LangChain offers a range of pricing plans, starting with a free tier and scaling up based on usage, providing options suitable for individual developers to enterprise-level needs.

Relevant Links

Python LangChain: https://python.langchain.com/v0.2/docs/introduction/

JavaScript LangChain: https://js.langchain.com/v0.2/docs/tutorials/

GitHub: https://github.com/langchain-ai

Community: https://www.langchain.com/join-community

Integrations: https://python.langchain.com/v0.2/docs/integrations/platforms/

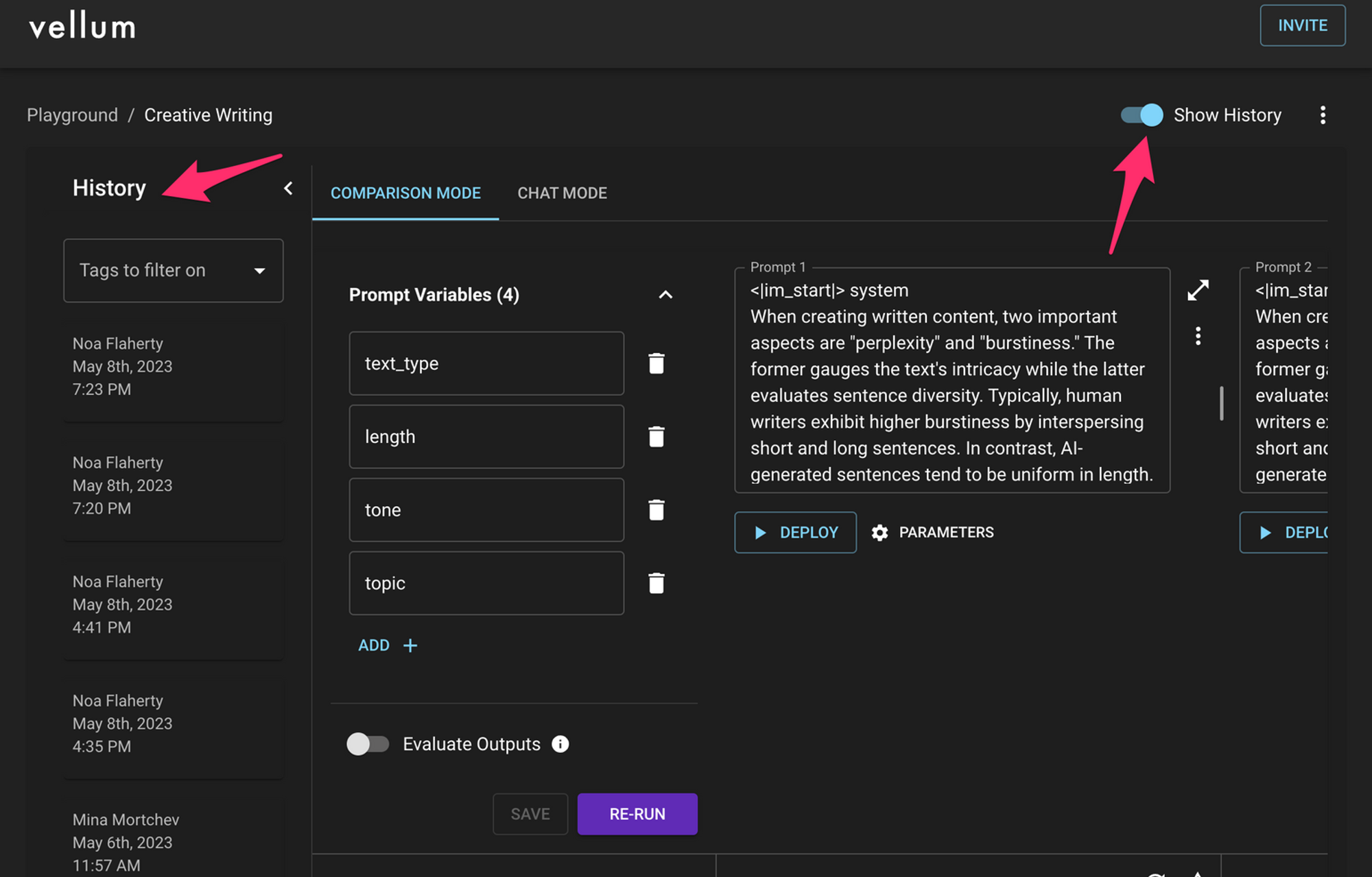

Vellum AI

Vellum AI is a platform designed to streamline the deployment, monitoring, and management of large language models (LLMs) in production environments. It provides tools for fine-tuning, scaling, and optimizing models, helping businesses efficiently integrate AI into their operations. Vellum AI is particularly focused on simplifying the process of using LLMs in real-world applications, ensuring performance and reliability.

Benefits

- A user-friendly interface that simplifies model deployment and management.

- The platform enables fast integration of AI models into production environments.

- It offers comprehensive tools for real-time performance tracking and optimization.

- The platform easily scales with growing data and usage needs.

Considerations

- Although user-friendly, Vellum AI might still have a learning curve for those unfamiliar with AI deployment and management tools.

- The pricing may be steep for smaller businesses, with advanced features often available only in higher-tier plans.

Pricing

Vellum AI offers a flexible pricing model with customized plans based on usage and specific needs, catering to different business sizes and requirements.

Relevant Links

Docs: https://docs.vellum.ai/

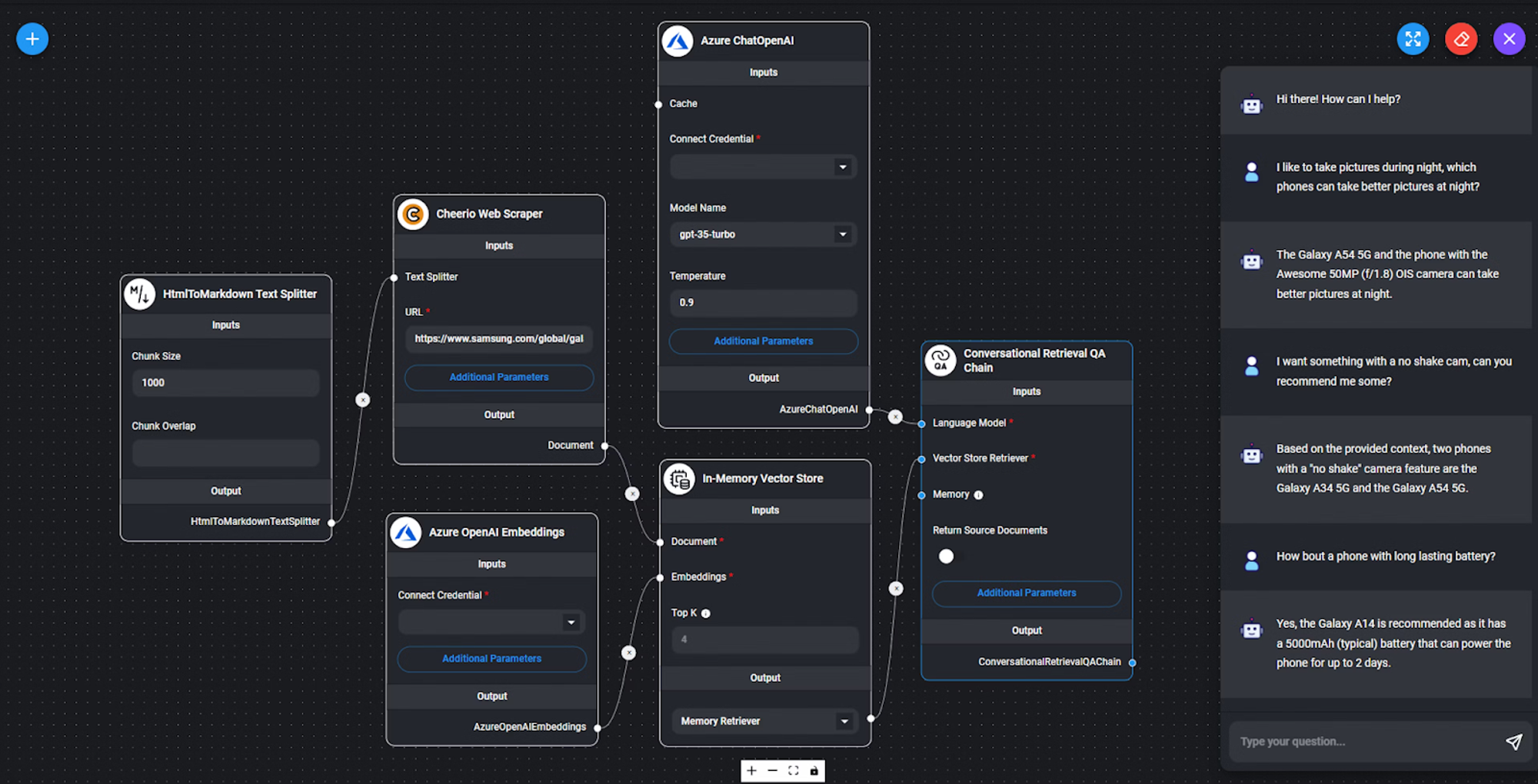

Flowise AI

Flowise is an open-source platform that simplifies the creation and management of AI applications by providing an easy-to-use interface for connecting large language models (LLMs) with various data sources.

Benefits

- Drag & Drop Interface

- 3rd Party Platform Integration

- Accelerated Development with No/Low-Code Interface

Considerations

- Users unfamiliar with Node.js or TypeScript may find the learning curve somewhat challenging.

- The interface might feel overwhelming for new users due to the abundance of available options.

Pricing

FlowiseAI offers a free tier and paid plans starting at $10 per month, providing scalable options to suit different needs and budgets.

Relevant Links

Docs: https://docs.flowiseai.com/

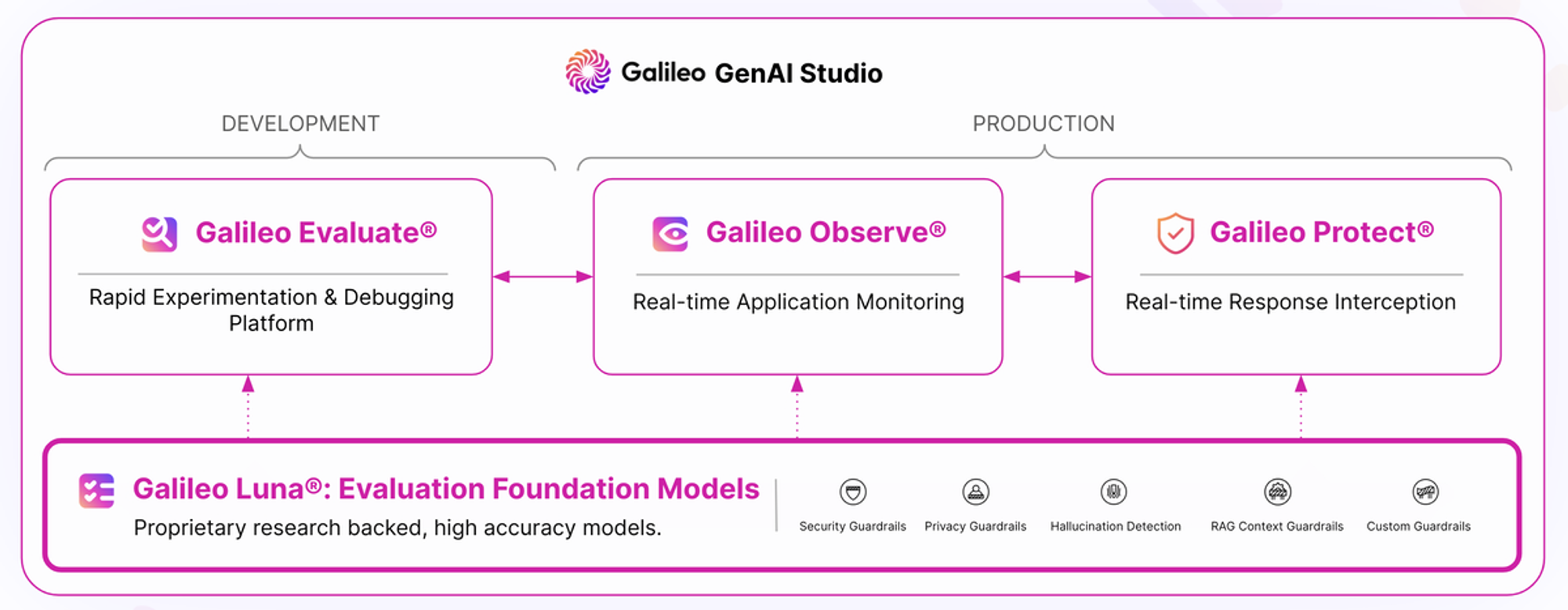

Galileo

Galileo is a platform designed to help machine learning teams build, debug, and optimize their datasets for AI model development.

Benefits

- Galileo offers robust tools for identifying and fixing issues within datasets, helping to improve model accuracy and performance.

- The platform provides in-depth insights into datasets, including the detection of data drift and mislabeling, which are crucial for maintaining high data quality.

- It supports effective collaboration between team members, allowing for more streamlined and coordinated efforts in data management and model development.

Considerations

- Finding specific platform features and capabilities can be difficult, often requiring direct contact with the organization for clarification.

- The platform would be more usable if users could upload sample designs or reference URLs.

- More built-in report types are needed to address emerging challenges, which would greatly aid in data optimization and accuracy efforts.

Pricing

Galileo offers enterprise pricing plans tailored to each customer’s needs and scale.

Relevant Links

AutoChain

AutoChain is a platform developed by Forethought that automates the creation and management of AI-driven workflows. It enables businesses to design, deploy, and scale complex AI applications with ease, leveraging powerful tools for data processing, natural language understanding, and decision-making. AutoChain is designed to streamline AI operations, allowing users to build intelligent systems that can interact with data, automate tasks, and enhance decision-making processes across various business functions.

Benefits

- AutoChain offers a streamlined and adaptable framework for building generative agents.

- The platform supports the integration of various custom tools and accommodates OpenAI function calling, enhancing the agent's capabilities and flexibility.

- AutoChain provides simple memory tracking for conversation history and tool outputs, ensuring that interactions remain coherent and contextually relevant.

- It features automated multi-turn conversation evaluations using simulated interactions.

Pricing

Forethought has not published any pricing for AutoChain.

Relevant Links

Doc: https://autochain.forethought.ai/

GitHub: https://github.com/Forethought-Technologies/AutoChain

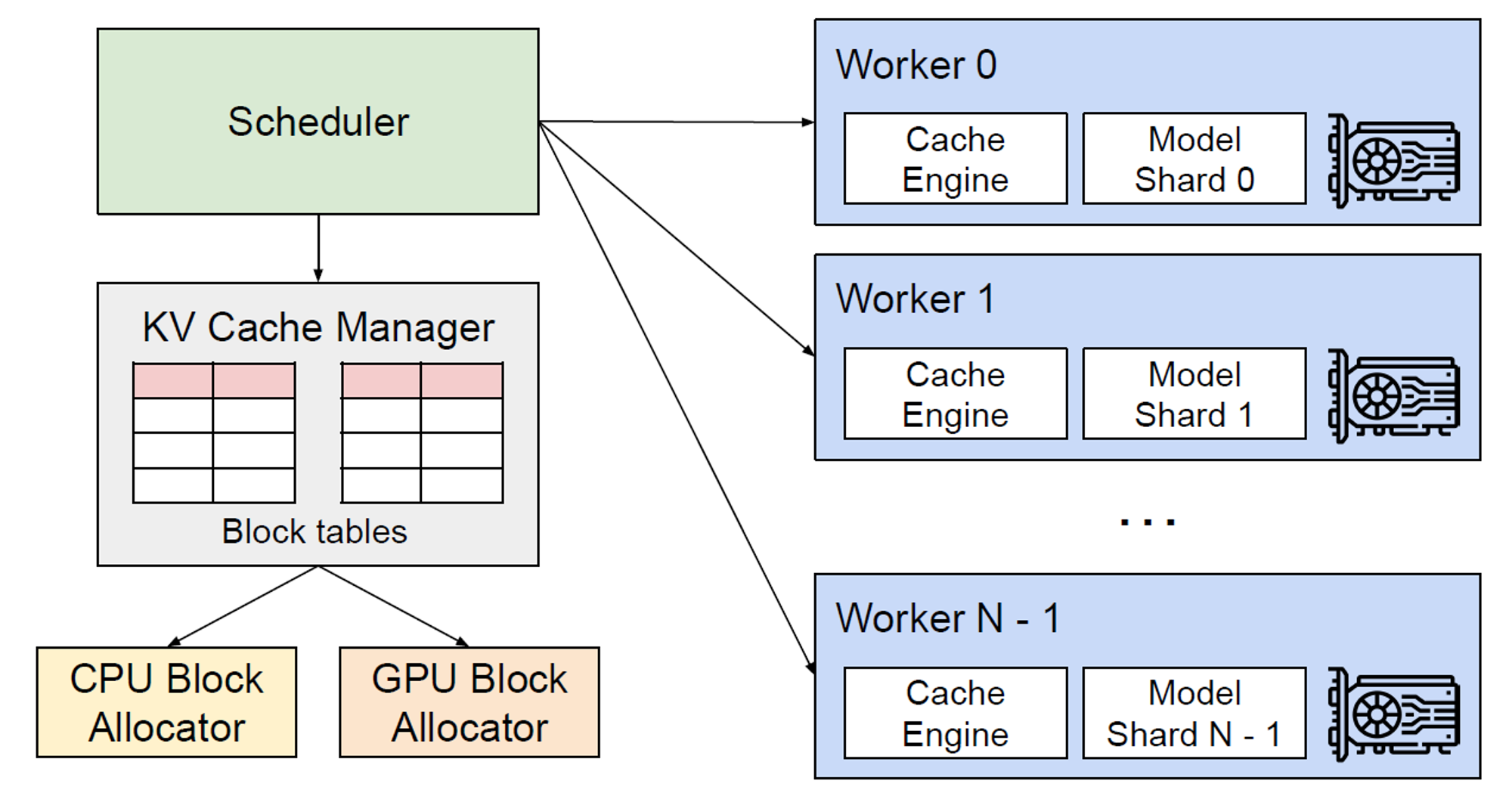

vLLM

vLLM was initially introduced in a paper titled "[Efficient Memory Management for Large Language Model Serving with PagedAttention](https://arxiv.org/abs/2309.06180)," authored by Kwon et al. vLLM, short for Virtual Large Language Model, is an active open-source library designed to efficiently support inference and model serving for large language models (LLMs).

Benefits

- vLLM efficiently manages the KV cache and handles various decoding algorithms.
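The paper's core idea is to store the KV cache in fixed-size blocks that need not be contiguous, with a per-sequence table mapping logical positions to physical blocks. The toy allocator below sketches only that bookkeeping; it is not vLLM's code, and `PagedKVCache` with its methods is a hypothetical class written for this example.

```python
class PagedKVCache:
    """Toy allocator: each sequence maps to a list of fixed-size physical blocks."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))     # physical block ids not in use
        self.block_tables: dict[str, list[int]] = {}   # sequence id -> its blocks
        self.lengths: dict[str, int] = {}              # sequence id -> tokens stored

    def append_token(self, seq_id: str) -> int:
        """Reserve a slot for one more token and return the physical block used."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1
        return table[-1]

    def free(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)
```

Allocating one block at a time means a sequence wastes at most one partially filled block, instead of reserving memory for its maximum possible length up front.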

Considerations

- vLLM requires significant customization to fit specific needs.

- In certain scenarios, the overhead associated with managing memory can reduce the anticipated performance gains.

Pricing

vLLM is open source and publishes no price list. The project describes itself as "easy, fast, and cheap LLM serving for everyone."

Relevant Links

Documentation: https://docs.vllm.ai/en/stable/#documentation

GitHub: https://github.com/vllm-project/vllm

Community: https://docs.vllm.ai/en/latest/community/meetups.html

OpenLLM

OpenLLM is a robust platform that enables developers to harness the capabilities of open-source large language models (LLMs). It serves as a foundational tool that easily integrates with other powerful technologies, including OpenAI-compatible endpoints, LlamaIndex, LangChain, and Transformers Agents.

Benefits

- OpenLLM seamlessly integrates with various powerful tools like OpenAI-compatible endpoints, LlamaIndex, LangChain, and Transformers Agents, allowing for versatile AI application development.

- It empowers you to utilize and customize open-source large language models, providing greater control and flexibility than proprietary solutions.

- OpenLLM is designed to be scalable, making it suitable for projects of different sizes, from small applications to large-scale deployments, with the ability to extend its capabilities as needed.

Relevant Links

AutoGPT

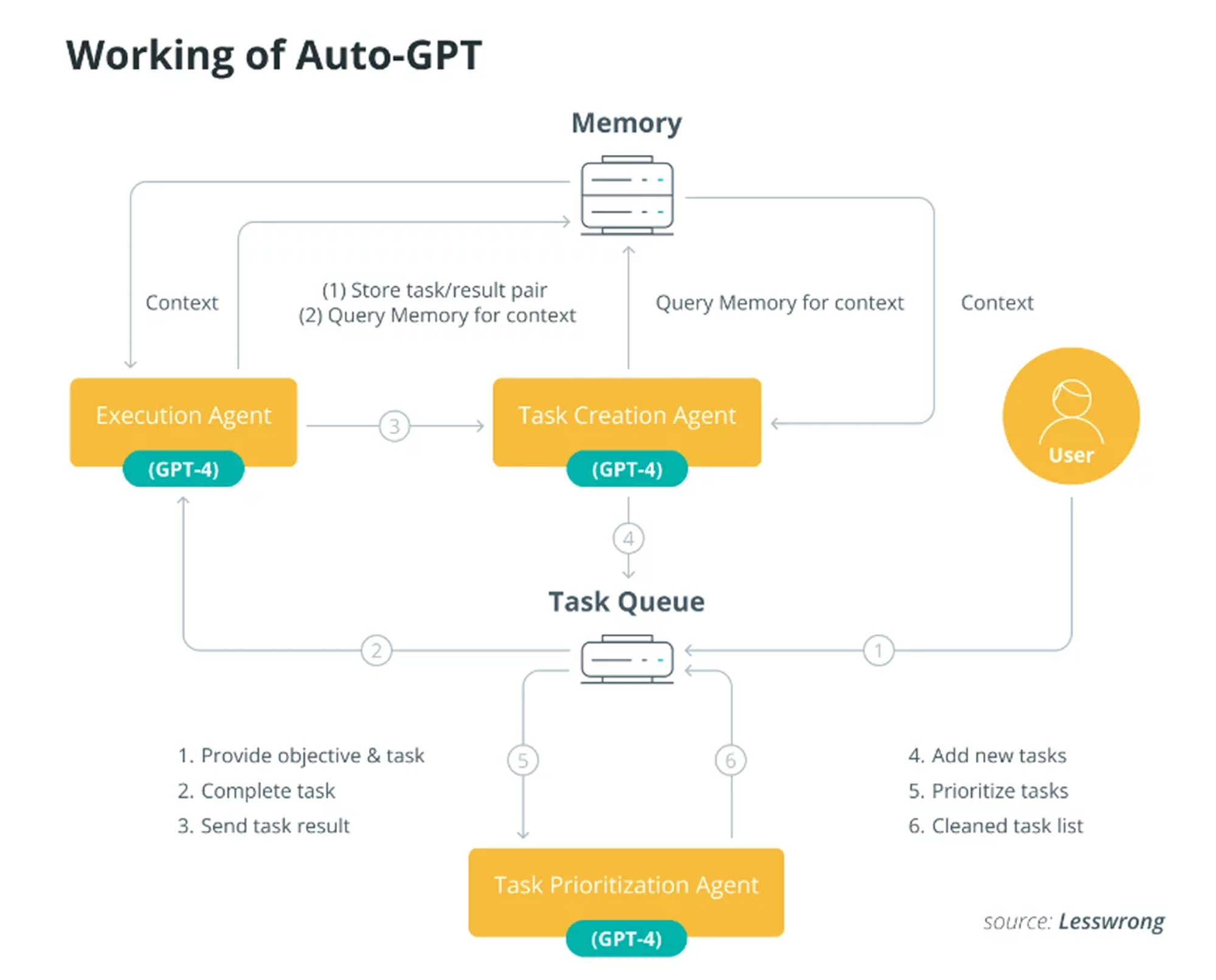

Auto-GPT is an advanced AI tool that builds on the capabilities of OpenAI’s GPT-4 model, allowing for autonomous task execution without requiring constant user input. Unlike ChatGPT, which operates in a conversational mode and requires user prompts for each interaction, Auto-GPT can independently plan, execute, and iterate on tasks.

Benefits

- It is helpful for complex, multi-step processes where automation is beneficial, such as content creation, business automation, or research.

- Auto-GPT can chain together multiple steps to achieve a goal, modify its approach based on intermediate results, and even interact with external tools and APIs to gather information or perform actions.
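The plan, execute, and iterate cycle described above can be sketched as a small loop. This is a conceptual outline, not Auto-GPT's implementation: `run_agent` and `scripted_plan` are hypothetical, and the planner here follows a fixed script where a real agent would ask an LLM for the next action.

```python
from typing import Callable, Optional

Planner = Callable[[str, list[str]], Optional[str]]


def run_agent(goal: str, plan: Planner, tools: dict[str, Callable[[], str]],
              max_steps: int = 5) -> list[str]:
    """Repeatedly pick an action, run the matching tool, and record the result."""
    history: list[str] = []
    for _ in range(max_steps):  # hard cap so the loop cannot run forever
        action = plan(goal, history)
        if action is None:      # the planner decided the goal is reached
            break
        result = tools[action]()
        history.append(f"{action}: {result}")
    return history


def scripted_plan(goal: str, history: list[str]) -> Optional[str]:
    """Stand-in for an LLM planner: search first, then summarize, then stop."""
    steps = ["search", "summarize"]
    return steps[len(history)] if len(history) < len(steps) else None
```

The `max_steps` cap matters in practice because every iteration of a real agent costs at least one model call.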

Considerations

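- Auto-GPT has no long-term memory.

- It can be expensive to run, because each autonomous step triggers additional model calls.

- It is prone to technical issues, such as getting stuck repeating the same step.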

Pricing

AutoGPT offers Basic, Pro, and Advanced plans starting at $19 monthly or $190 annually, with a free plan that includes 30 message credits and one chatbot.

Relevant Links

Langdock

Langdock enables developers to easily deploy, manage, and scale LLMs across different environments. Langdock focuses on providing a seamless experience for building AI-powered applications by simplifying the complexities associated with model deployment, monitoring, and optimization. This makes it easier for businesses and developers to leverage the power of LLMs in their projects without needing extensive infrastructure or expertise.

Benefits

- Langdock ensures GDPR compliance and adheres to other data protection regulations by hosting servers and models within the EU.

- The platform is highly customizable.

- It is designed to support multi-user collaboration.

Considerations

- Although an API is available, users might encounter limitations until the full range of API features, including Assistants and Workflows, is fully released.

- Additionally, some features, such as GPT-4 Turbo, are initially restricted to specific regions, which could limit global accessibility.

Pricing

Langdock has not published pricing information for this product.

Relevant Links

Documentation: https://docs.langdock.com/api

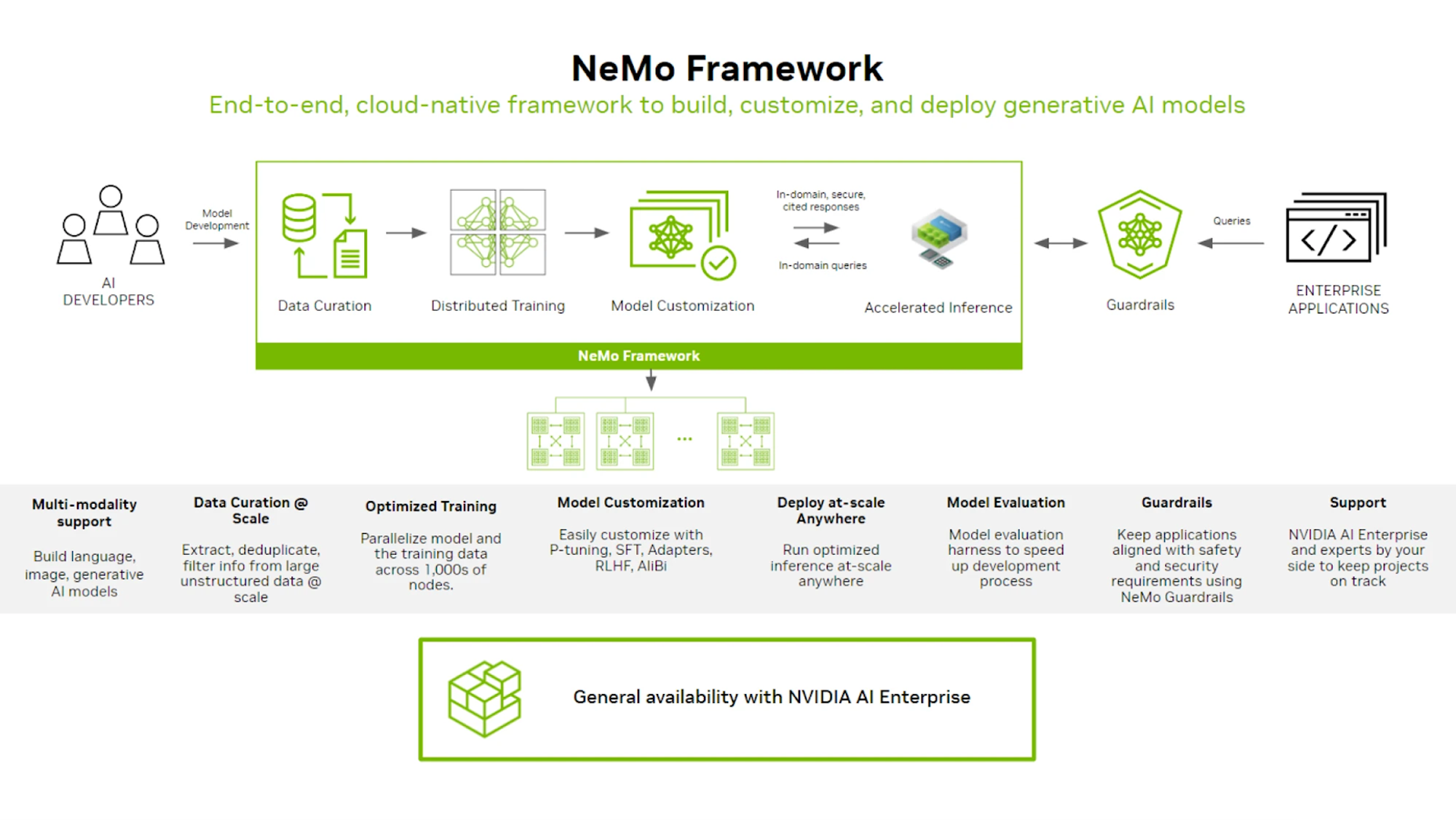

NeMo (NVIDIA)

NVIDIA NeMo is a comprehensive platform designed for developing custom generative AI models, including large language models (LLMs), as well as multimodal, vision, and speech AI applications across various environments. It enables the delivery of enterprise-ready models through advanced data curation, state-of-the-art customization, retrieval-augmented generation (RAG), and enhanced performance. NeMo is part of the NVIDIA AI Foundry, a broader platform and service focused on creating custom generative AI models utilizing enterprise data and domain-specific expertise.

Benefits

- NeMo leverages NVIDIA’s powerful hardware and software stack to deliver accelerated AI performance, reducing training and inference times significantly.

- NeMo supports AI development across various environments, making it versatile and adaptable for different deployment scenarios, whether on-premises, in the cloud, or at the edge.

Considerations

- The pre-trained speech models still have room for improvement: recognition of words and pronunciation can be hit-and-miss.

- Also, it requires substantial memory to run the models.

Pricing

NVIDIA has not published pricing information for NeMo.

Relevant Links

Microsoft Guidance

Microsoft Guidance is a library designed to give developers more control over large language models (LLMs) by enabling them to manage the model's behavior and outputs more precisely.

Benefits

- It allows developers to create more complex and structured prompts, integrate external data sources, and guide the model’s responses according to specific rules or logic.

- Guidance is particularly useful for scenarios where more deterministic and reliable outputs are needed, such as in enterprise applications, where consistency and accuracy are critical.
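One simple way to picture "guiding the model's responses according to specific rules" is to accept a reply only if it matches an allowed set. The sketch below uses validate-and-retry after generation, which is a weaker stand-in for the constrained generation that Guidance itself performs; `constrained_choice` and the `generate` callback are hypothetical names, not Guidance's API.

```python
from typing import Callable


def constrained_choice(generate: Callable[[str], str], prompt: str,
                       options: list[str], max_attempts: int = 3) -> str:
    """Query the model until its reply is one of the allowed options."""
    allowed = {option.lower(): option for option in options}
    full_prompt = f"{prompt}\nAnswer with one of: {', '.join(options)}"
    for _ in range(max_attempts):
        reply = generate(full_prompt).strip().lower()
        if reply in allowed:
            return allowed[reply]  # return the option in its canonical spelling
    raise ValueError(f"no valid answer after {max_attempts} attempts")
```

Raising an error instead of guessing a default keeps invalid outputs from silently flowing into downstream systems, which fits the consistency needs of the enterprise scenarios mentioned above.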

Considerations

- Integration options are more limited compared to other frameworks.

- The documentation for Microsoft Guidance is not as comprehensive, which can make troubleshooting and learning more difficult.

Relevant Links

Conclusion

Selecting the right LLM framework is crucial for successfully leveraging the power of large language models in your AI projects. Each of the top 14 frameworks we explored—ranging from the robust and versatile platforms like Hugging Face Transformers and LangChain to specialized tools like LlamaIndex and NVIDIA NeMo—offers unique benefits and considerations.

The choice of framework depends largely on your specific needs, such as ease of use, scalability, integration capabilities, and the particular AI tasks you aim to accomplish.

As the AI landscape continues to evolve, these frameworks will play a critical role in shaping how developers and organizations deploy, manage, and scale AI solutions. By carefully evaluating the features and limitations of each framework, you can ensure that your AI initiatives are not only powerful but also efficient, scalable, and aligned with your long-term goals.

Whether you are building complex AI workflows, enhancing existing applications, or exploring new AI-driven possibilities, choosing the right LLM framework will be key to unlocking the full potential of your AI endeavors.
