Missing from this list: an AI that actually fixes the issue →

Connect your tools and ask AI to solve it for you

What's an LLM Observability tool?

Observability refers to the ability to measure the internal state of a system based on the data it generates. This includes capturing metrics, logs and traces. While it is already popular with engineering teams for measuring their infrastructure & services, a new category has recently emerged within it due to the popularisation of LLMs. This category focuses on tools and packages that help measure the cost, quality & security of LLM usage within organisations.

Fun Fact: As per the State of CI / CD report 2024 by CD Foundation, AI-assisted coding tools are the 5th most popular category of tools adopted by engineers, on par with IDEs!

Why is LLM Observability becoming critical?

User experiences are becoming more intuitive & personalised as the usage of LLMs picks up pace across companies. Large corporations like Google and Meta were already offering these experiences in several of their customer & business products, but with ChatGPT’s launch in 2022, more companies now have the tools to build them. Since then, the number of available LLMs, both closed and open source, has increased rapidly.

While the architecture around LLM usage still needs the conventional observability setup, the LLM is mostly a separately deployed entity outside your code, accessed in a prompt + response model, which creates a need for qualitative observability. Every response, before being used, needs to be checked for hygiene and relevance. That means you need to set up logging of your LLM prompts & responses, and then run contextual analysis on top of them that fulfils your monitoring purpose.

What are LLM Observability tools supposed to do?

- Help track & improve response quality
  - Responses are relevant to the query and fact based
  - Responses don’t have biases for any user group or type of data
  - Responses improve the experience enough to justify being used
  - Additional context (passed as a vector dataset) is used optimally

- Help track & improve response reliability
  - Latency is acceptable
  - Quality and format remain consistent
  - User-level data silos are maintained

- Build visibility of the cost of using the LLM, especially when using a third party managed model

Architecture of LLM Observability tools

Typically, the instrumentation for LLM Observability is done in 2 ways:

1. Tracing - Trace the calls you make (with or without an agent) and create spans for request and response data, which are then used to create metrics and do quality analysis. This is useful when you only want to look at what happened and don’t want any interference in your usage.

2. Proxy - Route your LLM calls through a proxy layer which intercepts them and makes those calls on your behalf. This helps control some routine parameters without you worrying about them, but could lead to application downtime if the proxy is down.
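To make the difference between the two approaches concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `fake_llm` stands in for a real LLM client, and `traced`, `Span` and `LLMProxy` are hypothetical names, not the API of any tool in this list.

```python
import time
from dataclasses import dataclass
from functools import wraps
from typing import Callable, Dict, List


def fake_llm(prompt: str, temperature: float = 1.0) -> str:
    """Hypothetical stand-in for a real LLM client call."""
    return f"echo: {prompt}"


@dataclass
class Span:
    name: str
    prompt: str
    response: str
    duration_ms: float


# A real tracing tool would export spans to a backend; here they stay in memory.
SPANS: List[Span] = []


def traced(fn: Callable[..., str]) -> Callable[..., str]:
    """Tracing: observe the call and record a span without changing the call."""
    @wraps(fn)
    def wrapper(prompt: str, **kwargs) -> str:
        start = time.perf_counter()
        response = fn(prompt, **kwargs)
        elapsed_ms = (time.perf_counter() - start) * 1000
        SPANS.append(Span(fn.__name__, prompt, response, elapsed_ms))
        return response
    return wrapper


class LLMProxy:
    """Proxy: the application calls this layer, which calls the LLM for it."""

    def __init__(self, upstream: Callable[..., str], default_temperature: float = 0.2):
        self.upstream = upstream
        self.default_temperature = default_temperature
        self.log: List[Dict[str, str]] = []

    def complete(self, prompt: str) -> str:
        # The proxy sets parameters such as temperature on the caller's behalf.
        # If this layer is unavailable, the application cannot reach the LLM at all.
        response = self.upstream(prompt, temperature=self.default_temperature)
        self.log.append({"prompt": prompt, "response": response})
        return response


traced_llm = traced(fake_llm)
proxy = LLMProxy(fake_llm)
```

With tracing, the application still calls the model directly and the wrapper only records what happened. With the proxy, every call goes through `proxy.complete(...)`, which is where the extra control and the extra point of failure both come from.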

How do LLM Observability tools help engineering teams?

Looking at the data collected from your LLM usage, you can do multiple optimisations to improve either the cost, quality, user behaviour or security of your product:

1. Cost optimisation for usage:

- The tool provides the input/output token volume for every request sent to an LLM. This helps you look at the cost overhead of your requests, both at an individual level and in aggregate.

- Custom tagging: if you want to attribute cost to different entities, you can add tags for use-cases / accounts.
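As a rough illustration of token-based cost tracking with tags, the sketch below sums cost per tag. The prices in `PRICE_PER_1K` are made up for the example, and `LLMRequest`, `request_cost` and `cost_by_tag` are hypothetical names; real prices depend on your provider and model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

# Hypothetical prices in USD per 1,000 tokens; not real provider pricing.
PRICE_PER_1K = {"input": 0.5, "output": 1.5}


@dataclass
class LLMRequest:
    input_tokens: int
    output_tokens: int
    tag: str  # e.g. a use-case or an account name


def request_cost(req: LLMRequest) -> float:
    """Cost of a single request, from its input and output token counts."""
    input_cost = (req.input_tokens / 1000) * PRICE_PER_1K["input"]
    output_cost = (req.output_tokens / 1000) * PRICE_PER_1K["output"]
    return input_cost + output_cost


def cost_by_tag(requests: Iterable[LLMRequest]) -> Dict[str, float]:
    """Aggregate cost per tag so spend can be attributed to an entity."""
    totals: Dict[str, float] = defaultdict(float)
    for req in requests:
        totals[req.tag] += request_cost(req)
    return dict(totals)
```

Calling `cost_by_tag` on a day's worth of logged requests gives the per-use-case or per-account view, while `request_cost` gives the individual view.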

2. A/B Experimentation on Prompts / Prompt Engineering:

- Try out multiple prompt templates, keep them side by side, explore which prompt gives the best results and iterate on them. What would otherwise require extensive manual interpretation on a UI layer like ChatGPT is done programmatically and tracked in the LLM Observability tool.

- Fun Fact: “Prompt engineering” as a keyword is less than 3 years old, yet there are more than 2000 openings for “Prompt Engineer” on the LinkedIn job portal alone.
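A programmatic prompt comparison can be as small as the sketch below. It assumes a stub model (`fake_llm`) and a toy scoring rule (`score`); both are hypothetical, and in practice the scoring would be a human rating or an LLM-based evaluation.

```python
from statistics import mean
from typing import Callable, Dict, List

# Example templates and test cases; all values here are illustrative.
TEMPLATES = {
    "plain": "What is the capital of {country}?",
    "terse": "Answer in one word. What is the capital of {country}?",
}
CASES = [{"vars": {"country": "France"}, "expected": "Paris"}]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in model: answers tersely only when asked to."""
    if "one word" in prompt:
        return "Paris"
    return "The capital of France is the city of Paris."


def score(response: str, expected: str) -> float:
    """Toy metric: 1.0 for an exact match, 0.5 if the answer is contained, else 0.0."""
    if response.strip() == expected:
        return 1.0
    return 0.5 if expected in response else 0.0


def compare_prompts(
    templates: Dict[str, str],
    cases: List[dict],
    llm: Callable[[str], str],
) -> Dict[str, float]:
    """Run every template against every case and return the mean score per template."""
    results = {}
    for name, template in templates.items():
        scores = [
            score(llm(template.format(**case["vars"])), case["expected"])
            for case in cases
        ]
        results[name] = mean(scores)
    return results
```

Running `compare_prompts(TEMPLATES, CASES, fake_llm)` returns one score per template, which is the side-by-side view an observability tool would track across iterations.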

3. Evaluate the right LLM for your use-case: The screenshot below shows output from empirical.run, an open source project that compares different models and their outputs for given prompts. Such comparisons are generally available within LLM Observability tools and help you identify the best model for your use-case faster.

4. Improve RAG: RAG (Retrieval-Augmented Generation) is a strategy that leverages existing / private context to help the LLM answer questions better. An LLM Observability tool will also help you iterate efficiently over your context-retrieval queries with respect to prompts, chunking strategy and vector store format.

5. Fine-tuned model performance: Analyse how training the LLM with your data is affecting outputs, and whether there’s any significant difference in output quality between different versions of your model.

Top Tools to consider for LLM Observability

- Langsmith

- Langfuse

- Helicone

- Lunary

- Phoenix (by Arize)

- Portkey

- OpenLLMetry (by traceloop)

- Trulens

- Datadog

- Other tools evaluated

Tools

Langsmith

Langsmith is the LLM Observability platform from Langchain, one of the fastest growing projects for LLM orchestration in the early days. It was launched in July 2023 and so far has over 100K users, one of the largest communities for a tool in the LLM space.

Benefits

- In-built with Langchain: It’s a tracing tool that comes in-built with Langchain. So, if you are a Langchain user, no changes are needed; it publishes traces for your LLM calls to its cloud.

- Feedback loop: It allows you to manually rate your responses or use an LLM to do so. It also works with non-Langchain agents.

Considerations

- There is no self-hosting option within their self-serve module. They provide cost analysis and insights, but only for OpenAI usage currently.

Pricing

While Langchain is open source, we could not find any GitHub repo for Langsmith apart from its SDKs. It offers only a cloud SaaS solution, with a free tier of 5K traces per month. Self-hosting is available only as an add-on in the Enterprise plan.


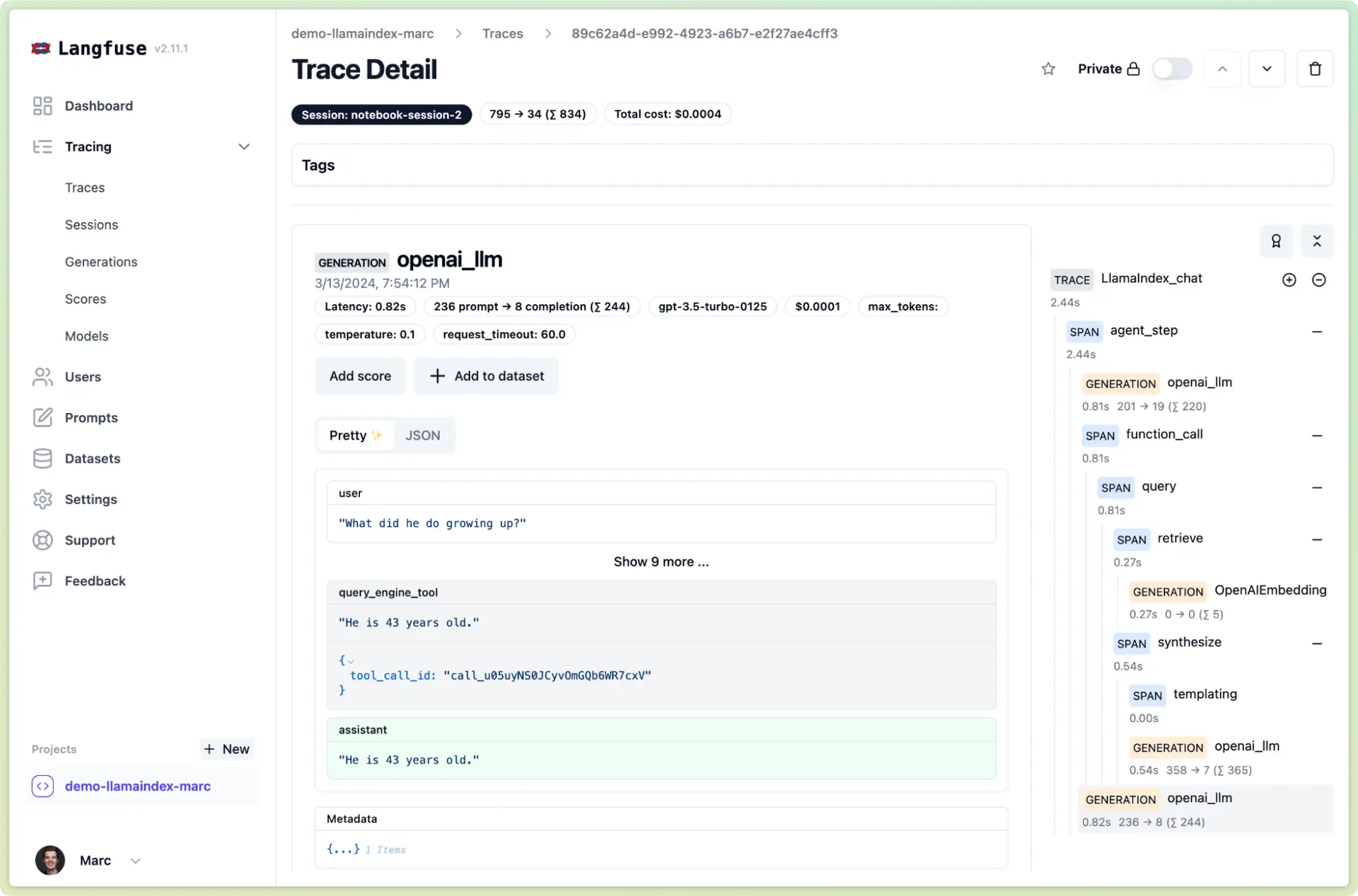

Langfuse

Langfuse is a leading observability tool for tracing, evaluation, logging and prompt management.

Benefits

- Langfuse is one of the most popular open-source LLM Observability platforms, as per their public usage statistics.

- An active project on Github with community contributors

Pricing

Cloud platform with freemium plans -- read about them here: https://langfuse.com/pricing

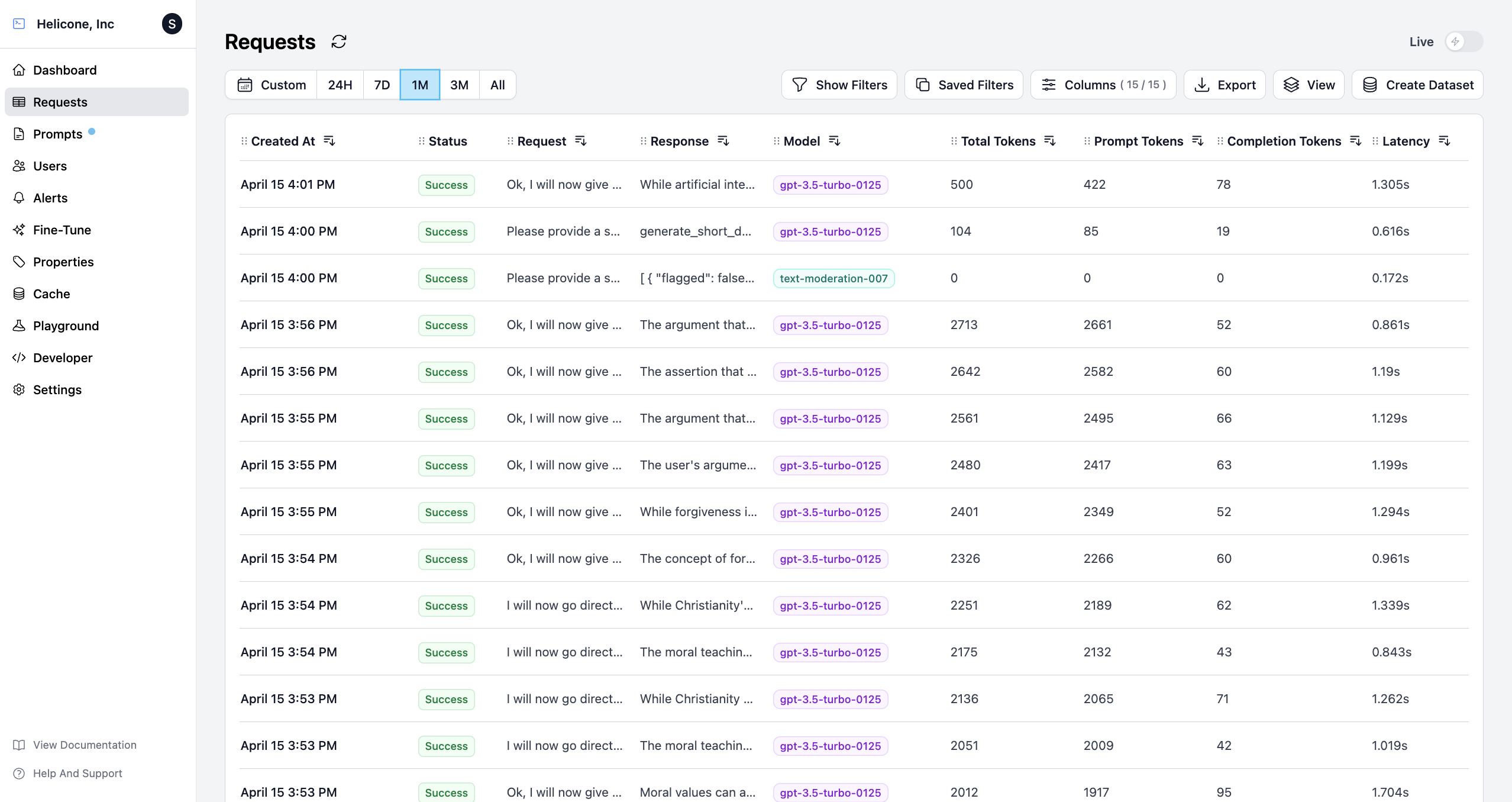

Helicone

Helicone is an open source LLM Observability startup, part of the Y Combinator W23 cohort.

Benefits

- Setting up Helicone as a proxy takes just a two-line code change.

- They support OpenAI, Anthropic, Anyscale, and a couple of OpenAI compatible endpoints.

Considerations

- They only do logging of requests & responses.

Pricing

- They have a generous free tier of 50K monthly logs.

- They are also open source, with an MIT License.


Lunary

Benefits

- It’s a tracing tool that works with Langchain & OpenAI agents. It is completely model agnostic.

- Its cloud offering gives you the option to benchmark models and prompts against your ideal responses.

- It also has a feature called Radar, which helps categorise LLM responses based on pre-defined criteria so that you can revisit them later for analysis.

Considerations

- Open source adoption of the tool is still at an early stage.

Pricing

It is available as open source under the Apache 2.0 licence. Its free tier allows only 1K daily events.


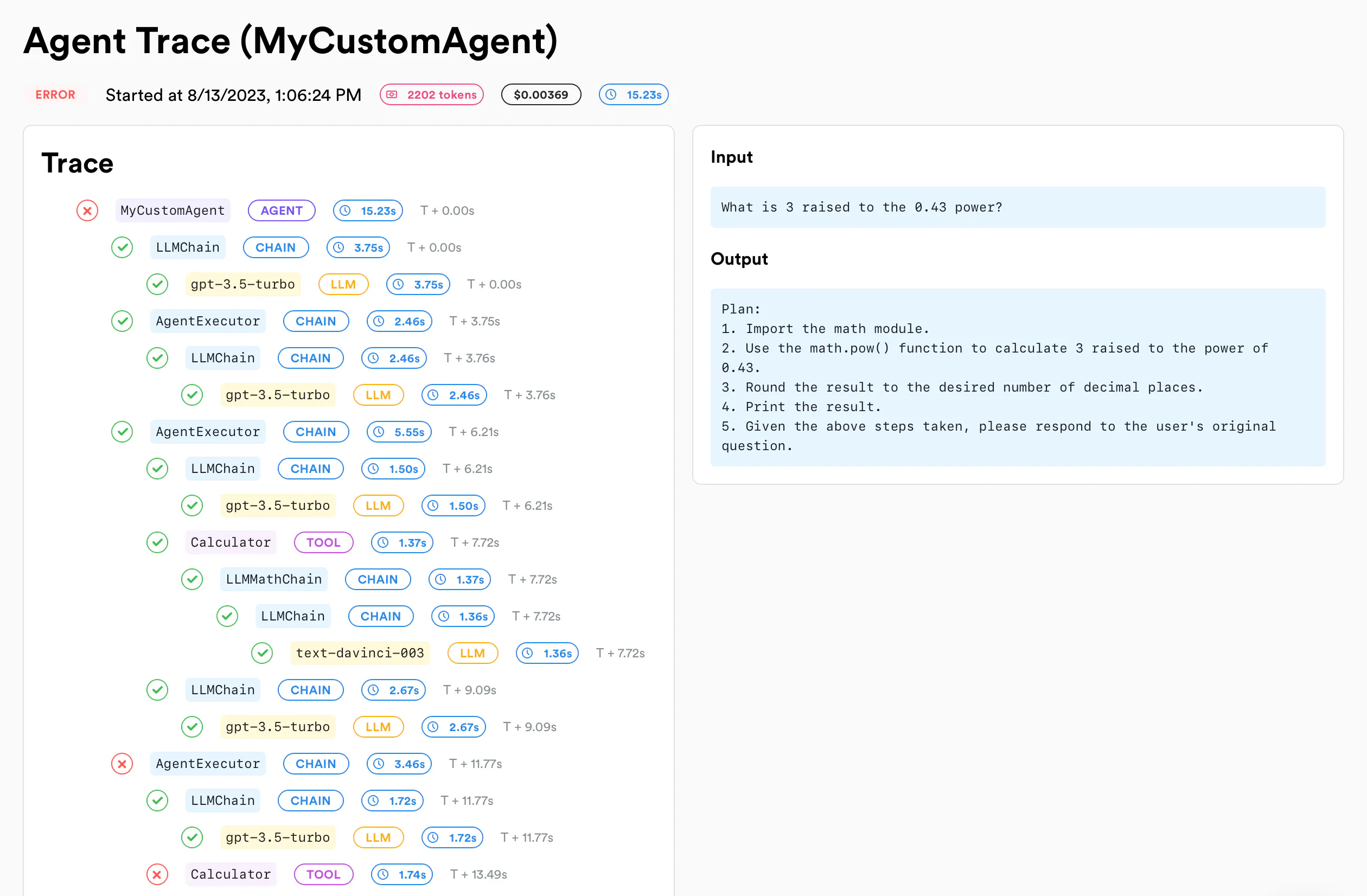

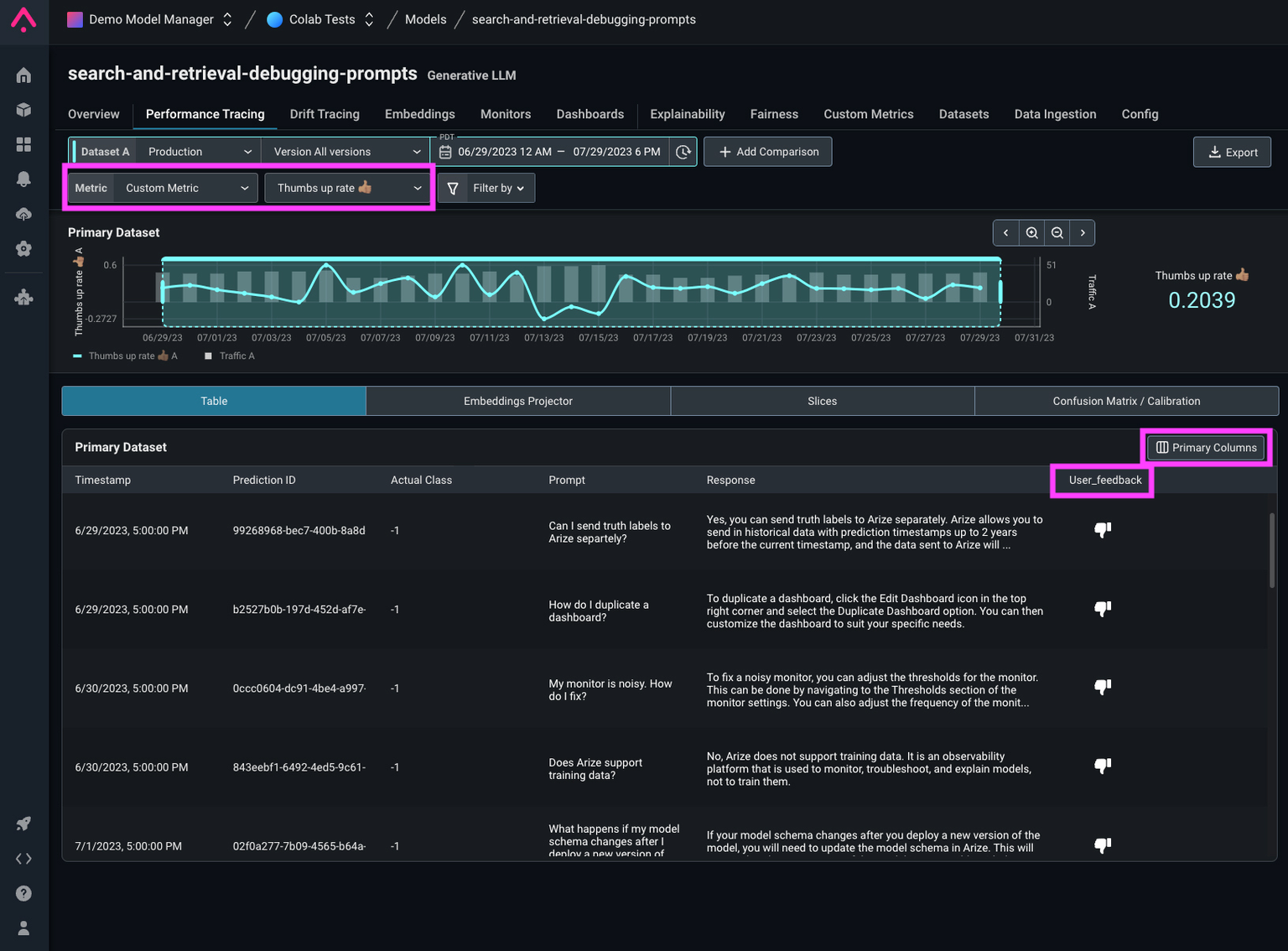

Phoenix (by Arize)

Arize is an ML Observability platform, catering to evaluation, observability and analytics for ML / LLM models.

Benefits

- It’s a tracing tool that works with Langchain, LlamaIndex & OpenAI agents. It is only available as open source, under the ELv2 License.

- It has an in-built Hallucination detection tool which is powered by an LLM of your choice.

- OpenTelemetry compatible tracing agent.

Pricing

Freemium model


Portkey

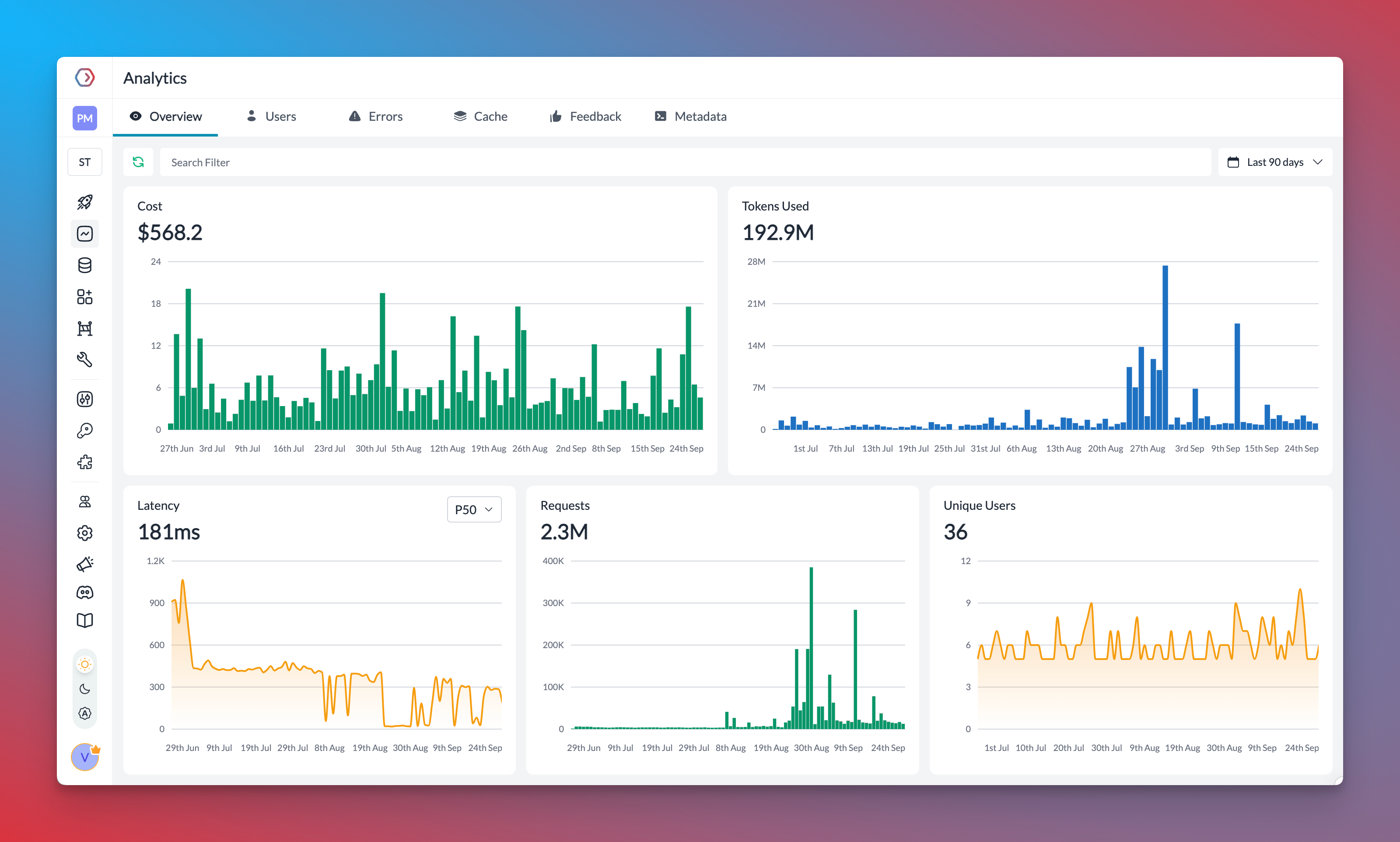

(At Doctor Droid, we use Portkey.) Portkey originally became popular for its open source LLM Gateway, which abstracts 100+ LLM endpoints behind one API. After that, they started working on the LLM Observability tool, which is their current focus area.

Benefits

- Portkey is a proxy where you can maintain a prompt library and just pass variables in the template to call your LLM.

- The tool manages all basic configurations for your integration, like temperature. It gives you options to cache responses, load balance between models and set up fallbacks.
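To show what response caching and fallbacks mean at the gateway layer, here is a generic Python sketch. It is not Portkey's API: `GatewaySketch`, `flaky_primary` and `backup_model` are hypothetical names used only to illustrate the behaviour.

```python
from typing import Callable, Dict, Optional, Sequence


def flaky_primary(prompt: str) -> str:
    """Hypothetical primary model that is currently unavailable."""
    raise TimeoutError("primary model unavailable")


def backup_model(prompt: str) -> str:
    """Hypothetical fallback model."""
    return f"backup answer to: {prompt}"


class GatewaySketch:
    """Tries models in order and caches the first successful response per prompt."""

    def __init__(self, models: Sequence[Callable[[str], str]]):
        self.models = list(models)  # later entries act as fallbacks
        self.cache: Dict[str, str] = {}

    def complete(self, prompt: str) -> str:
        # Serve repeated prompts from the cache instead of calling a model again.
        if prompt in self.cache:
            return self.cache[prompt]
        last_error: Optional[Exception] = None
        for model in self.models:
            try:
                response = model(prompt)
            except Exception as exc:
                last_error = exc  # remember the failure, then try the next model
                continue
            self.cache[prompt] = response
            return response
        raise RuntimeError("all models failed") from last_error


gateway = GatewaySketch([flaky_primary, backup_model])
```

Here `gateway.complete("hello")` falls back to `backup_model` because the primary raises, and a second call with the same prompt is answered from the cache. An exact-match cache is the simplest possible policy and is an assumption of this sketch.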

Considerations

- Only does logging of requests and responses. It does not trace requests.

Pricing

Its free-tier offers 10K monthly requests.


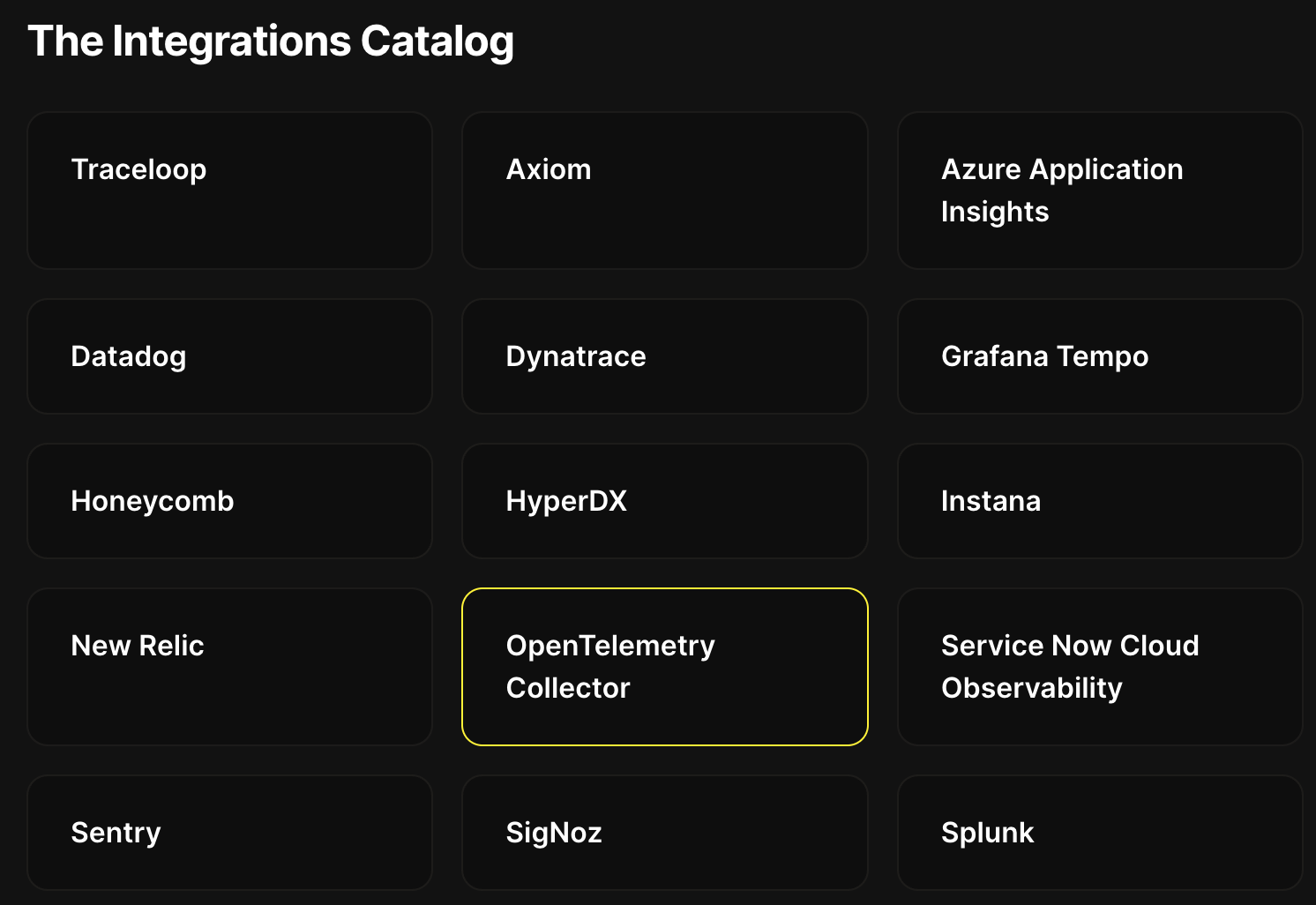

Traceloop OpenLLMetry

Traceloop is another YC W23 batch startup that helps monitor LLMs. Their SDK, OpenLLMetry, helps teams forward LLM Observability logs to 10+ different tools instead of staying locked in to any one tool.

Benefits

- It’s a tracing tool that collects traces directly from an LLM provider or a framework like Langchain & LlamaIndex and publishes them in OTel format.

- Being in OpenTelemetry format makes them compatible with multiple tools for visualisation and tracing. It is available for multiple languages.

Pricing

It offers a backend for receiving these traces as well. Free-tier offering includes 10K monthly traces. It is also open source with Apache 2.0 License.


Trulens

Benefits

- They are focused more around qualitative analysis of responses.

- They inject a feedback function that does this analysis after each LLM call.

- These feedback functions act like a model that evaluates the response of the original call.
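The feedback-function idea can be sketched in plain Python as below. This is not the Trulens API: `with_feedback`, `relevance_feedback` and `fake_llm` are hypothetical names, and the keyword-overlap score is a toy substitute for the model-based evaluation a real feedback function would run.

```python
from typing import Callable, Dict, List, Tuple


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return "Paris is the capital of France."


def relevance_feedback(prompt: str, response: str) -> float:
    """Toy feedback function: share of prompt keywords that appear in the response."""
    keywords = {w.strip("?.,").lower() for w in prompt.split() if len(w) > 3}
    if not keywords:
        return 0.0
    text = response.lower()
    hits = sum(1 for keyword in keywords if keyword in text)
    return hits / len(keywords)


def with_feedback(
    llm: Callable[[str], str],
    feedback_fns: List[Callable[[str, str], float]],
) -> Tuple[Callable[[str], str], List[Dict]]:
    """Wrap an LLM call so every response is scored right after it is produced."""
    records: List[Dict] = []

    def wrapped(prompt: str) -> str:
        response = llm(prompt)
        scores = {fn.__name__: fn(prompt, response) for fn in feedback_fns}
        records.append({"prompt": prompt, "response": response, "scores": scores})
        return response

    return wrapped, records
```

After `ask, records = with_feedback(fake_llm, [relevance_feedback])`, each call to `ask(...)` returns the response unchanged and appends a scored record, which is the data a qualitative dashboard would be built on.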

Considerations

It is only available in Python.

Pricing

It is open source with an MIT License. The cloud offering is not self-serve.


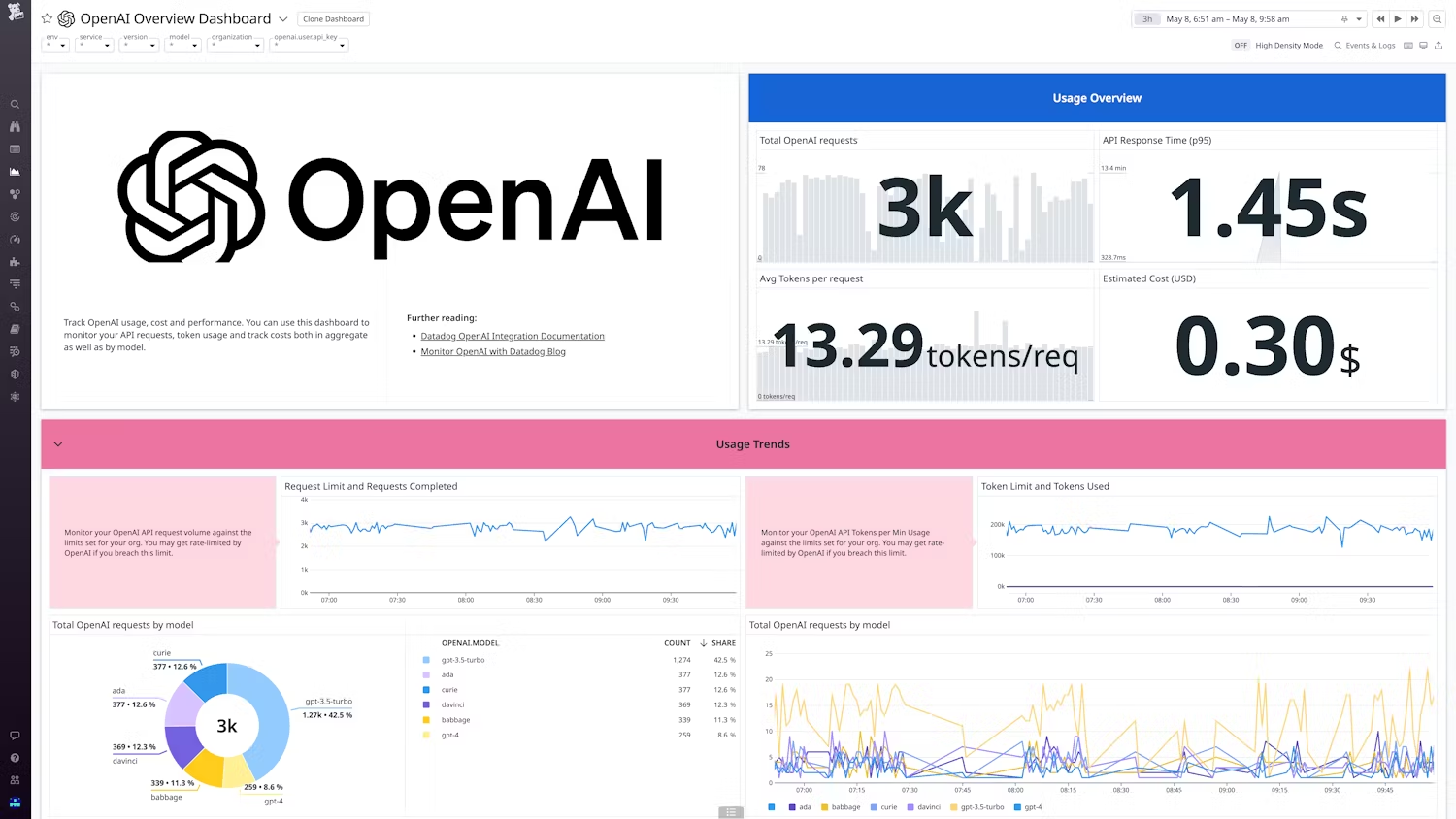

Datadog

Datadog is a popular infrastructure & application monitoring platform which has extended some of its integrations into the space of LLMs and related tooling. It’s interesting to see its out-of-the-box dashboards for LLM Observability, yet it’s clear that there’s a lot more to be expected from a brand like Datadog here.

Benefits

- If you are already using Datadog for tracing, OpenAI usage tracing can be enabled with just a flag change.

- They also focus on monitoring associated infrastructure, such as vector DBs.

Considerations

- Currently supports only OpenAI integrations in depth. As with the standard Datadog offering, there is only a cloud option.

- They do not support LLM experimentation or iterations like the other tools mentioned above.

- Their OOB compatibility for integrations, frameworks and endpoints is limited to the top few options like OpenAI, Langchain.

Pricing

Pricing is based on your Metrics / Traces usage within Datadog.

Relevant Links

Details on how the setup works: https://docs.datadoghq.com/integrations/openai/

Other tools worth checking out for LLM Observability


While we tried to give you a detailed overview of some of the top tools in the category, here’s an additional list of tools:

Commercial

- PromptLayer

- Vellum: While Vellum does provide LLM quality tracking capabilities, at the outset it looks designed more for creating end-to-end workflows using LLMs.

- WandB (Weights & Biases): A product primarily providing MLOps insights, which also has an LLMOps offering.

Conclusion

Like any other developer tool, there is a large overlap of features amongst these tools. Here is our take:

- If you are a startup that has just started experimenting and needs a quick way to log your LLM integrations, use the free tier of Langsmith or Portkey to start tracking. As you go into production and don’t want to send your data outside your environment, set up Portkey locally. It has > 4K GitHub stars and is well adopted, with a fair-sized community. Arize is also getting fairly popular and is another option to consider.

- If you are a large company and have heavy LLM integration volumes and need a reliable solution for your tracking, use Langsmith or Datadog.

- If you are into keeping things strictly vendor neutral, go for OpenLLMetry for tracing and use the destination of your choice.

- If you are in a large company and have a very specific project for prompt improvement and fine-tuning LLM features, Lunary has very good options in its cloud offering to help you do that.
