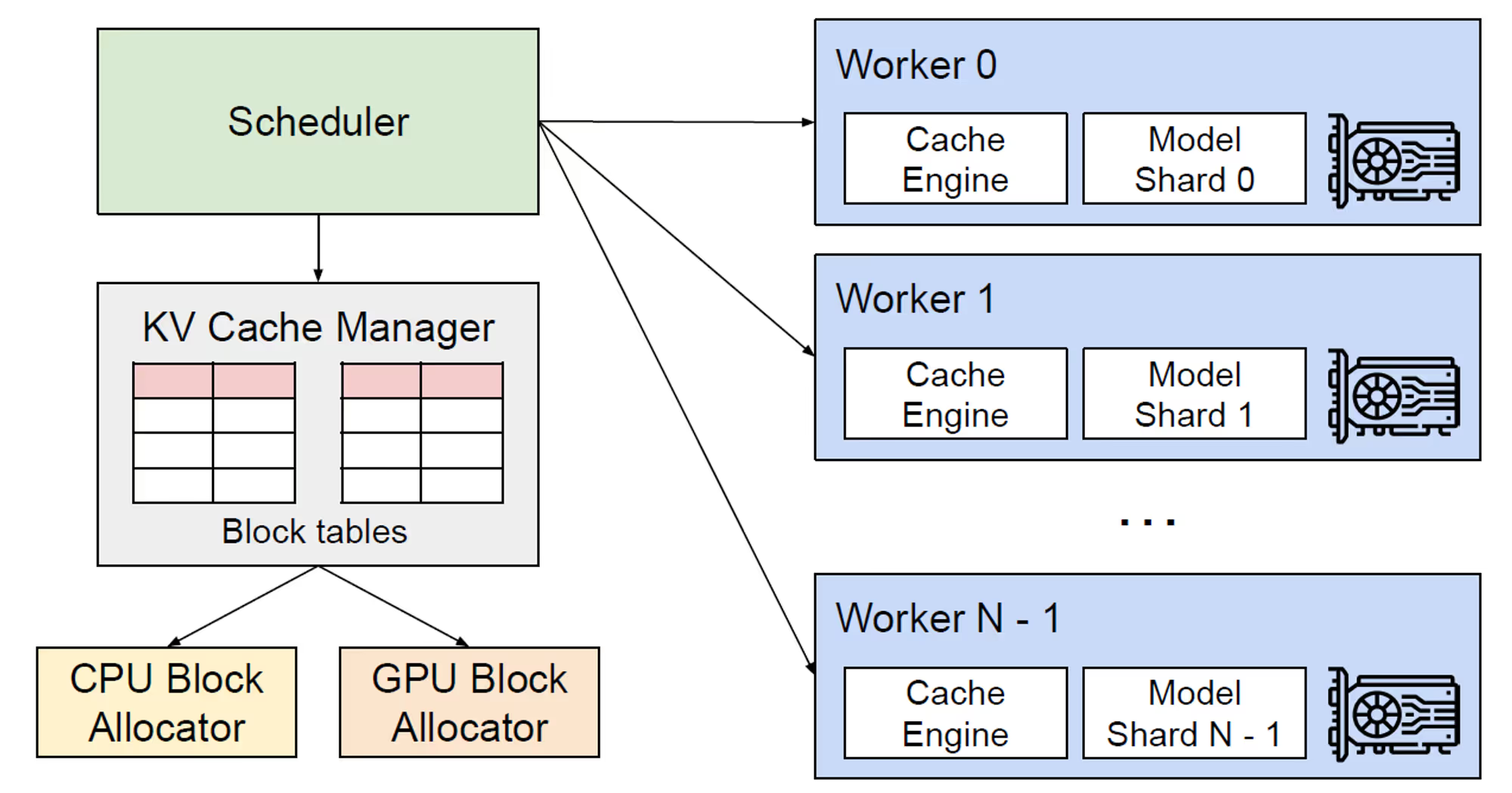

vLLM was introduced in the paper "[Efficient Memory Management for Large Language Model Serving with PagedAttention](https://arxiv.org/abs/2309.06180)" by Kwon et al. The name is commonly expanded as "Virtual Large Language Model." vLLM is an actively developed open-source library for efficient inference and serving of large language models (LLMs).
